Beginning before extraction

Most AI ethics conversations begin after the harm.

After the dataset is assembled. After the model is shipped. After the apology is drafted.

This essay begins earlier: at the moment a system decides what data is — property to be owned, or relationship to be stewarded.

Because the problem isn't only bias. It's accountability.

AI systems keep repeating the same harms because the architecture is built for launch and closure, not memory and return.

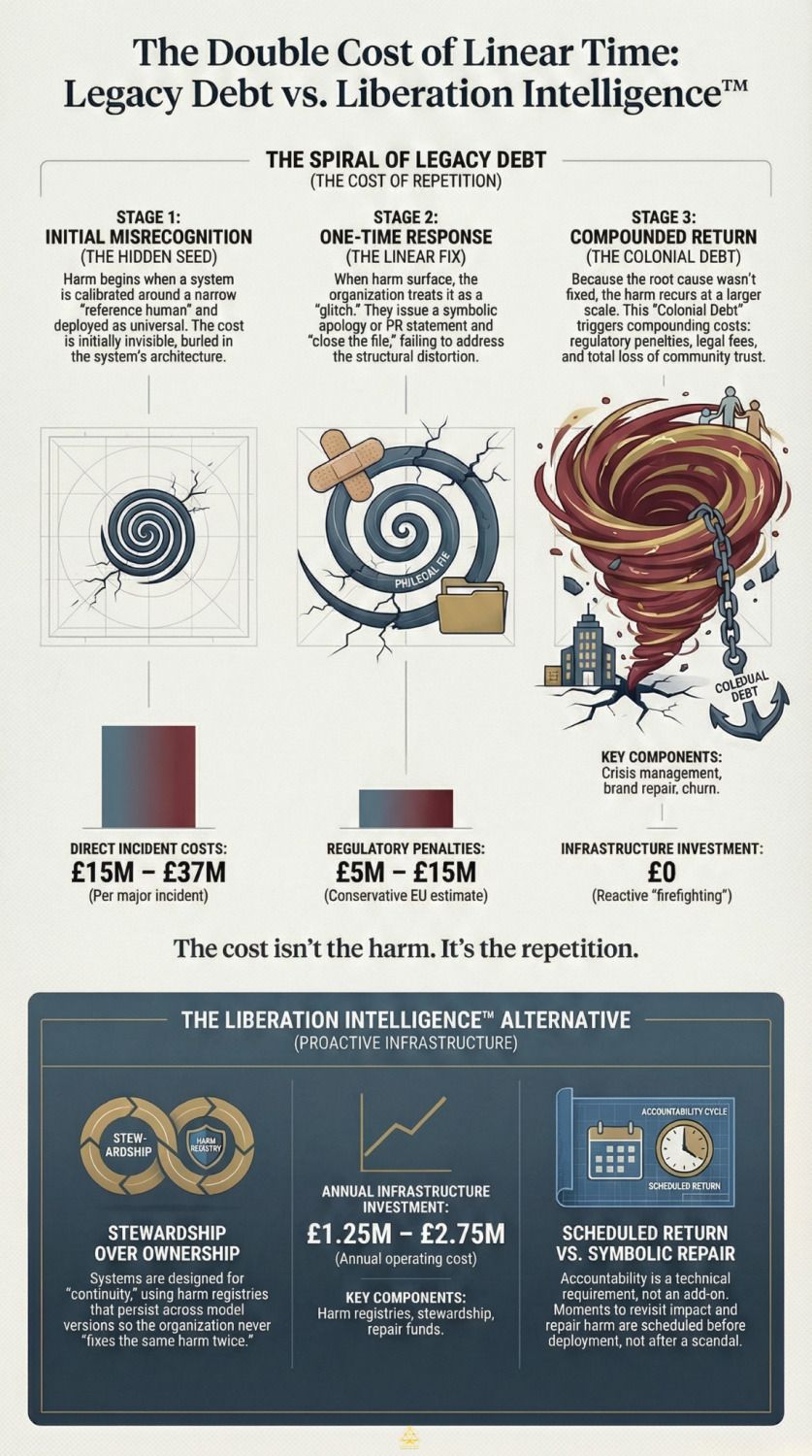

Here's the uncomfortable balance-sheet version: a single major AI incident can cost a mid-sized company £15M–£37M in crisis response, fines, attrition, and trust loss. Building accountability infrastructure costs a fraction of that annually.

So this isn't a moral add-on. It's organisational survival.

The question is not "who owns the code?" It's who remains responsible for it — six months, two years, three versions later.

This essay is written to be returned to. Not read once and agreed with, but revisited when a system fails, a harm resurfaces, or a familiar apology is issued again.

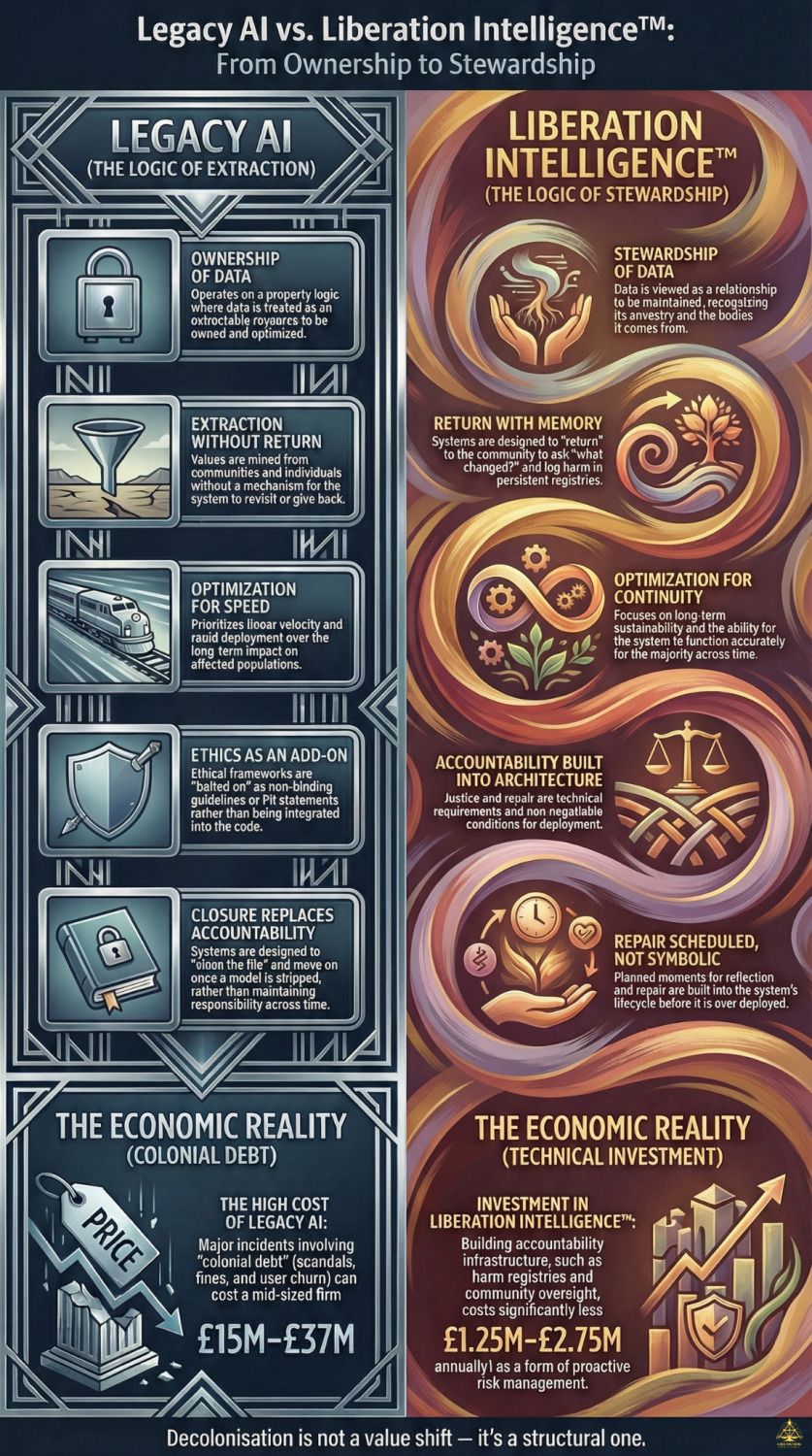

The economics of extraction versus stewardship

Before philosophy, the balance sheet.

Scenario: Mid-sized AI company, 500 employees, 10 million users.

Cost of one legacy AI failure:

Crisis management (scandal, legal, brand repair): £2M

Regulatory penalties (EU AI Act estimate): £5M–£15M

Attrition (10 senior engineers): £300k + knowledge loss

User churn (15% among affected communities): £8M–£20M

Total: £15M–£37M per major incident

Cost of proactive accountability infrastructure:

Harm registries + consent systems + return audits: £500k–£1.5M/year

Community oversight + co-design: £250k–£750k/year

Repair fund: £500k/year

Total: £1.25M–£2.75M annually

This is an insurance premium. Spend roughly £2.5M a year now to avoid roughly £30M per incident later.
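The totals above can be checked in a few lines. This is a minimal sketch that sums the low and high ends of the line items listed in this section; the variable and function names are illustrative, and the figures are this essay's scenario estimates, not measured data.

```python
# Figures in GBP, copied from the scenario above as (low, high) ranges.
incident_costs = {
    "crisis_management": (2_000_000, 2_000_000),
    "regulatory_penalties": (5_000_000, 15_000_000),
    "attrition": (300_000, 300_000),  # knowledge loss is not priced in
    "user_churn": (8_000_000, 20_000_000),
}
infrastructure_costs = {
    "registries_consent_audits": (500_000, 1_500_000),
    "community_oversight": (250_000, 750_000),
    "repair_fund": (500_000, 500_000),
}


def total_range(items):
    """Sum the low ends and the high ends of a dict of (low, high) ranges."""
    low = sum(lo for lo, _ in items.values())
    high = sum(hi for _, hi in items.values())
    return low, high


incident_total = total_range(incident_costs)
infrastructure_total = total_range(infrastructure_costs)

print(f"One incident: £{incident_total[0] / 1e6:.2f}M to £{incident_total[1] / 1e6:.2f}M")
print(f"Infrastructure per year: £{infrastructure_total[0] / 1e6:.2f}M to £{infrastructure_total[1] / 1e6:.2f}M")
```

The incident items sum to £15.3M at the low end and £37.3M at the high end, which the essay rounds to £15M–£37M. The infrastructure items sum to exactly £1.25M–£2.75M a year.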

Technical debt vs colonial debt

Most organisations understand technical debt. Ship quick code, pay later with bugs and rewrites.

Legacy AI operates the same way — but the debt is cultural, relational, and political.

Every time a system extracts without consent, optimises without return, or deploys without accountability, it takes out colonial debt.

The interest isn't just bugs. It's community backlash, regulatory scrutiny, legal liability, reputational collapse, and loss of trust that cannot be rebuilt with PR.

By the third incident, repair costs are often ten times what proactive infrastructure would have cost.

Decolonising AI is not ethics versus velocity. It is long-term sustainability versus short-term extraction that collapses under its own weight.

The structural pattern: extraction without return

When this essay uses "colonial," it's naming a structural pattern — extraction without return, optimisation without accountability, ownership without relationship — not accusing individual intent.

Modern AI inherits logics from:

Land enclosure (common resources as private property)

Census classification (people reduced to administrative categories)

Surveillance capitalism (attention as extractable resource)

When systems treat data as "raw material" waiting to be refined, that's not neutral. That's inherited extraction logic.

When consent is a checkbox buried in terms and conditions, that's not consent. That's extraction with paperwork.

When algorithms optimise for engagement without accountability for harm, they're designed to forget who they affect.

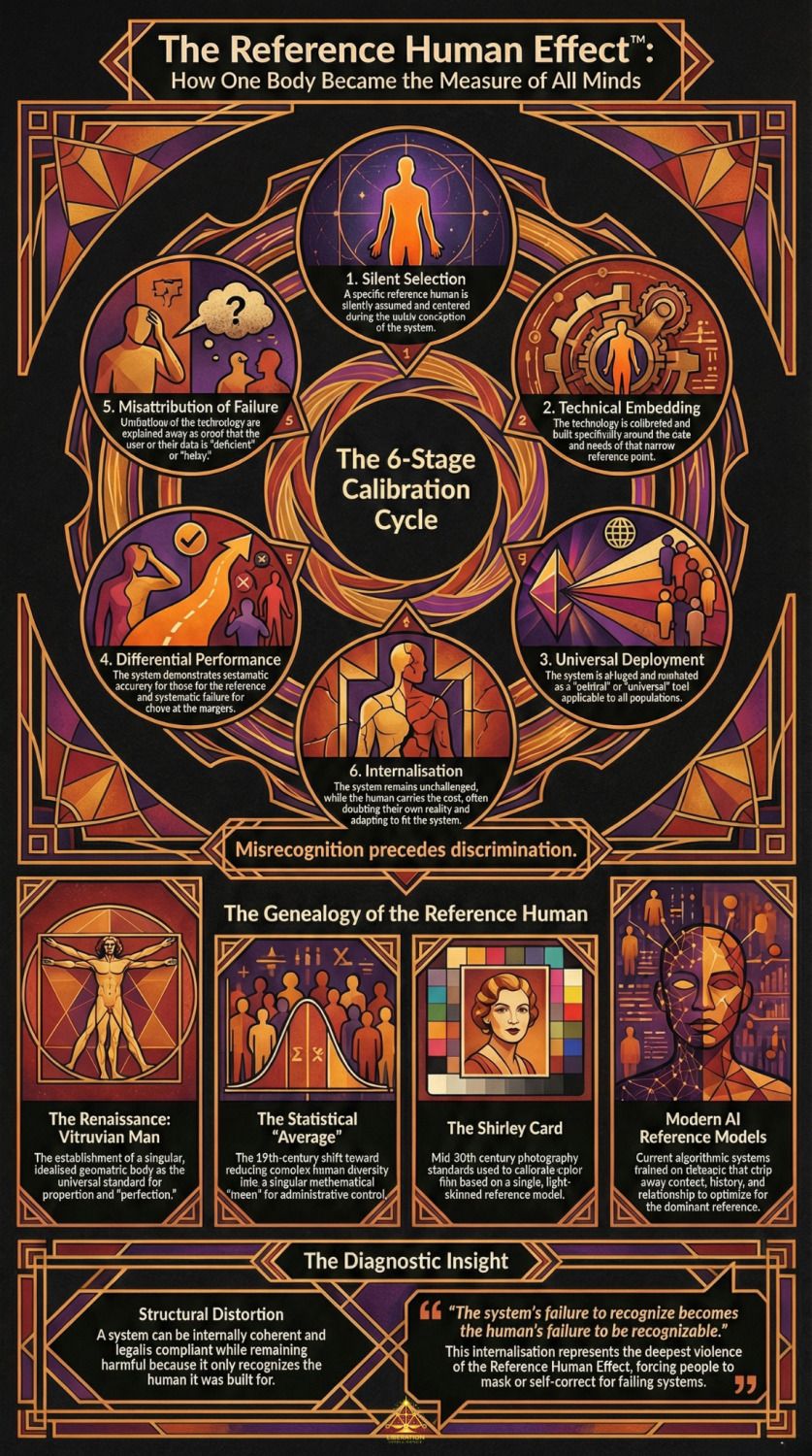

Misrecognition precedes discrimination

Here's the diagnostic shift that changes everything.

Systems don't begin by harming people. They begin by failing to recognise their reality accurately, because they were never calibrated to see it.

Discrimination is how harm appears. Misrecognition is how harm becomes inevitable.

Or in engineering terms: your model is hallucinating a universality that doesn't exist. It's overfitting to a narrow demographic.

This isn't a moral failing. This is low-fidelity engineering.

Not unwilling — systemically trapped

This is not about individual malice.

Most engineers are not consciously building harmful systems. Most organisations do not wake up and decide to extract value from vulnerable communities.

What's happening is systemic blindness.

The system was calibrated to a specific reference human — historically white, male, Western, able-bodied — and that calibration becomes invisible to those it serves.

They experience the system as logical, fair, just how things work.

They are not lying. They genuinely do not see the calibration — because it was designed to be invisible to them.

This is not a sin the engineer is committing. It is a trap they are caught in.

The reference human effect limits their view of reality too. It constrains their ability to build systems that work accurately for the actual diversity of human experience.

So the call to action is not repentance. It's debugging: debugging false universality, debugging invisible defaults, debugging the assumption that your reference population represents everyone.

This bypasses identity politics and goes straight to the problem-solving frame engineers are trained for.

The calibration cycle: why harm keeps returning

Imagine you're a V-team engineer. You spent three weeks fixing a bias issue in your hiring algorithm. You close the JIRA ticket, ship the patch, move on to the next sprint.

Six months later, the exact same problem appears in a different feature.

Why? Because no one went back to fix the root cause. You patched the surface symptom.

This is the frustration of linear bug-fixing. Every engineer knows this pattern.

Across domains — photography, medicine, hiring algorithms — the same pattern repeats:

Stage 1: A reference human is silently assumed

Stage 2: Systems calibrated around that reference

Stage 3: System deployed as "universal"

Stage 4: Performance differs by proximity to reference

Stage 5: Failure blamed on the human, not the system

Stage 6: Reference remains unnamed and unchallenged

This is not random error. This is systematic accuracy for some, systematic failure for others.

The system explains its own limitations as proof the user is deficient.
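Stage 4 is the one a team can measure directly. A minimal sketch, assuming you have predictions, ground truth, and some group label for each record: compute accuracy per group instead of one overall number. The group names and the ten records below are invented purely to show the mechanics; they are not results from any real system.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, prediction, truth). Returns {group: accuracy}."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, prediction, truth in records:
        totals[group] += 1
        hits[group] += int(prediction == truth)
    return {group: hits[group] / totals[group] for group in totals}


# Toy, made-up records: 8 from a "reference" group, 2 from another group.
records = (
    [("reference", 1, 1)] * 8
    + [("other", 1, 0), ("other", 0, 0)]
)

overall = sum(p == t for _, p, t in records) / len(records)
per_group = accuracy_by_group(records)

print("overall:", overall)
print("per group:", per_group)
```

In this toy case the headline figure is 0.9 while the second group sits at 0.5. A single aggregate number hides exactly the gap the calibration cycle describes.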

This is why we need Spiral Time

Linear systems optimise for launch and closure. Ship the model, close the ticket, move on to version 2.0.

Spiral systems are designed to return. They schedule checkpoints — 2 weeks, 6 weeks, 12 weeks after deployment — to ask:

What changed?

Who was harmed?

What needs repair?

Return is not retrospective. It is scheduled before deployment.

This isn't philosophy. It's a lifecycle model that prevents the "patch and pray" approach that causes harm to recur.
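One way to make "scheduled before deployment" concrete is to generate the return dates at the moment a release date is set. The sketch below is an assumption about how a team might do that, not a prescribed tool: `schedule_returns` and the constant names are hypothetical, and the offsets and questions are taken from the text above.

```python
from datetime import date, timedelta

# Offsets from the text: 2, 6 and 12 weeks after deployment.
RETURN_OFFSETS_WEEKS = (2, 6, 12)

RETURN_QUESTIONS = ("What changed?", "Who was harmed?", "What needs repair?")


def schedule_returns(deploy_date, offsets_weeks=RETURN_OFFSETS_WEEKS):
    """Return the checkpoint dates to book before the release goes out."""
    return [deploy_date + timedelta(weeks=weeks) for weeks in offsets_weeks]


# Example deployment date chosen only for illustration.
checkpoints = schedule_returns(date(2025, 3, 3))
for when in checkpoints:
    print(when.isoformat(), "|", " ".join(RETURN_QUESTIONS))
```

For a release on 3 March 2025 this books returns on 17 March, 14 April, and 26 May. The point of generating them up front is that the calendar entries exist before anyone is tempted to close the ticket.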

Ownership is the wrong question

Most AI debates centre on ownership: Who owns the data? Who controls the model? Who profits?

These are industrial questions, not ethical ones.

Ownership assumes data is property. It assumes that once something is "owned," accountability ends.

A stewardship frame asks different questions:

Who is accountable across time?

Who carries harm when systems fail?

Who has the right to withdraw, revise, or return?

Ownership closes the file. Stewardship schedules return.

Engineering translation

Legacy AI: Transaction-based architecture

Liberation Intelligence: Relationship-based architecture

When you treat data as property, you build systems to extract value and move on.

When you treat data as relationship, you build systems to remember, repair, and return.

The first produces velocity without accountability. The second produces accountability that holds over time.

That's the shift: from systems that ask "Can we close this?" to systems that ask "What changed when we returned?"

What I call Liberation Intelligence™ is architectural redesign — systems that refuse to operate without memory, justice, and return built into their foundation.

This is not a think piece. It is a reference frame for teams tired of fixing the same harm twice.

The Blueprint: Ancestry, Justice, Return

If ownership is the wrong frame, what replaces it?

Not regulation alone. Not ethics boards. Not better bias audits.

What replaces it is infrastructure — built to hold memory, justice, and return as non-negotiable conditions for deployment.

I call this The Decolonial AI Blueprint™, and it rests on three principles:

1. Ancestry

Data comes from bodies, histories, and places. That context must remain visible.

Engineering specification:

Every dataset should travel with a minimum viable provenance manifest — teams can implement it as a schema even if governance maturity is low.

    {
      "origin_community": "Community name or description",
      "consent_model": "aggregated | individual | public_domain",
      "steward_contact": "[email protected]",
      "collection_date": "2024-01-15",
      "intended_use": "Medical diagnosis training",
      "prohibited_uses": ["Surveillance", "Profiling"],
      "withdrawal_process": "request logged; training inclusion reviewed; unlearning pathway depends on model class"
    }

This is not a wish. This is a spec. If an engineer can visualise the schema, they're halfway to believing it's possible.
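A manifest only helps if something refuses datasets that arrive without one. Here is a minimal sketch of such a check, assuming the manifest has been loaded into a Python dict. The function name `manifest_problems` is hypothetical, the field names and consent values come from the schema above, and the contact address in the example is a placeholder.

```python
REQUIRED_FIELDS = {
    "origin_community", "consent_model", "steward_contact", "collection_date",
    "intended_use", "prohibited_uses", "withdrawal_process",
}
CONSENT_MODELS = {"aggregated", "individual", "public_domain"}


def manifest_problems(manifest):
    """Return a list of readable problems; an empty list means the manifest passes."""
    missing = sorted(REQUIRED_FIELDS - set(manifest))
    problems = [f"missing field: {name}" for name in missing]
    consent = manifest.get("consent_model")
    if consent is not None and consent not in CONSENT_MODELS:
        problems.append(f"unknown consent_model: {consent}")
    if "prohibited_uses" in manifest and not isinstance(manifest["prohibited_uses"], list):
        problems.append("prohibited_uses must be a list")
    return problems


example = {
    "origin_community": "Community name or description",
    "consent_model": "individual",
    "steward_contact": "steward@example.org",  # placeholder address
    "collection_date": "2024-01-15",
    "intended_use": "Medical diagnosis training",
    "prohibited_uses": ["Surveillance", "Profiling"],
    # withdrawal_process deliberately left out to show a failure
}

problems = manifest_problems(example)
print(problems)
```

The example fails with a single problem, the missing `withdrawal_process`. A check this small can run in a data-loading script long before any formal governance exists.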

2. Justice

Harm must be repairable, not just documented.

Engineering specification:

Harm registries that persist across model versions. Think of it as a new ticket type:

Harm Registry Entry:

harm_id: Unique identifier

model_version: Which version caused the harm

affected_population: Demographic or community impacted

harm_description: What happened

mitigation_status: [logged | investigating | resolved | recurring]

resolution_date: When repair completed (if applicable)

carry_forward: true (log travels to next version)

Every time you retrain or update, the harm registry is mandatory reading. You cannot ship version 4.0 without accounting for harms logged in versions 1.0–3.0.
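As a sketch of what "mandatory reading" could mean in practice, the registry entry can be modelled as a small record and the release step as a gate. The field names and statuses follow the entry above. Everything else is an assumption made for illustration: the `HarmEntry` class, the `blocking_harms` function, the idea that a release "accounts for" a harm by explicitly acknowledging its ID, and the three sample entries.

```python
from dataclasses import dataclass
from typing import Optional

STATUSES = {"logged", "investigating", "resolved", "recurring"}


@dataclass
class HarmEntry:
    harm_id: str
    model_version: str
    affected_population: str
    harm_description: str
    mitigation_status: str = "logged"
    resolution_date: Optional[str] = None
    carry_forward: bool = True  # the log travels to the next version

    def __post_init__(self):
        if self.mitigation_status not in STATUSES:
            raise ValueError(f"unknown mitigation_status: {self.mitigation_status}")


def blocking_harms(registry, acknowledged_ids):
    """Carried-forward harms that are unresolved and not acknowledged by this release."""
    return [
        harm for harm in registry
        if harm.carry_forward
        and harm.mitigation_status != "resolved"
        and harm.harm_id not in acknowledged_ids
    ]


# Illustrative entries only; not real incidents.
registry = [
    HarmEntry("H-001", "1.0", "hypothetical group A", "illustrative entry", "resolved", "2024-06-01"),
    HarmEntry("H-002", "2.0", "hypothetical group B", "illustrative entry", "recurring"),
    HarmEntry("H-003", "3.0", "hypothetical group C", "illustrative entry", "investigating"),
]

open_items = blocking_harms(registry, acknowledged_ids={"H-003"})
print([harm.harm_id for harm in open_items])
```

Here the version 4.0 release has acknowledged H-003 but not H-002, so H-002 blocks the ship. Whether acknowledgement is enough, or whether a recurring harm should block until it is resolved, is a policy decision the code leaves open.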

The objection: "But data withdrawal is impossible!"

Yes, it's incredibly difficult. Unlearning specific data from a trained model often requires retraining from scratch, which for large models can cost millions in compute.

Some of what I'm proposing is technically hard — withdrawal, unlearning, persistent harm registries. In places, it's still an emerging field.

But "hard" is not the same as "optional."

If an architecture cannot support withdrawal, memory, or return, it isn't finished — it is unfit for deployment in human systems.

You don't build a bridge and say "physics makes it hard to add safety rails, so we don't have them." You build the safety rails into the design or you don't build the bridge.

This is a design threshold, not an impossible demand.

3. Return

AI systems must be designed to revisit impact, not just ship outputs.

Practical implementation:

Before shipping any feature or model update, ask this in your Monday standup:

"If this causes harm in six months, do we have infrastructure to return — or will we just issue an apology and move on?"

If the answer is "we'll handle it when it happens," you are building legacy AI.

If the answer includes:

A scheduled return checkpoint (6-week, 12-week audit)

A harm registry entry prepared in advance

A community contact point for feedback

You are building toward Liberation Intelligence.
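The three conditions above can also be written down as a pre-ship check, so the standup question has something to point at. This is a minimal sketch under stated assumptions: the release plan is a plain dict, and the key names and the `missing_return_items` function are hypothetical.

```python
# Keys are hypothetical; labels are the three conditions listed above.
REQUIRED_ITEMS = {
    "return_checkpoints": "a scheduled return checkpoint",
    "harm_registry_entry": "a harm registry entry prepared in advance",
    "community_contact": "a community contact point for feedback",
}


def missing_return_items(release_plan):
    """List the return conditions that a release plan omits or leaves empty."""
    return [
        label for key, label in REQUIRED_ITEMS.items()
        if not release_plan.get(key)
    ]


# Example plan: checkpoints are booked, the rest is missing or blank.
plan = {
    "return_checkpoints": ["6-week audit", "12-week audit"],
    "community_contact": "",
}

missing = missing_return_items(plan)
print(missing)
```

An empty list means the plan names all three. Anything else is the honest version of "we'll handle it when it happens".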

This blueprint is not anti-technology. It is anti-amnesia.

It refuses the idea that innovation requires forgetting who systems are built on. It insists that if AI cannot answer "What changed when we returned?", it is not ready for deployment.

That is the threshold. Not capability. Accountability.

Why "ethical AI" keeps failing

If the need for ethical AI is so widely acknowledged, why does harm keep recurring?

Ethical AI keeps failing not because people don't care — but because care was never given infrastructure.

Most ethics frameworks:

Sit outside system architecture

Are advisory, not binding

Expire when leadership changes

Do not survive contact with scale

Treat harm as a PR problem, not a structural one

Ethics without stewardship becomes branding. Stewardship without return becomes control.

The crucial distinction most frameworks miss:

A bias audit can confirm the system is consistent, without proving it's correct.

Bias audits alone fail to prevent recurrence. "Fairness" metrics get gamed. Diverse hiring in AI labs does not, on its own, translate into less harmful systems.

Because the architecture itself remains unchanged.

Decolonising AI is not about better ethics. It is about building systems where memory, justice, and return are technical requirements — not aspirational add-ons.

The "we can't afford to slow down" objection

The predictable response: "We're in a race. If we pause to build harm registries, our competitors will crush us."

Let's address this directly.

First: The market reality

If you build a product that only works well for a narrow demographic, you are limiting your own total addressable market.

Designing for multiplicity — ensuring your tech works for everyone, not just wealthy Western users — is not ethics charity. It is better product-market fit. It is statistical accuracy.

If your model can't recognise 40% of the global population, that's not a values problem. That's a quality problem.

Second: The startup objection

"A 3-person startup with £2M in the bank can't budget £2M for community oversight."

Fair point. But you don't need to build the entire infrastructure on day one.

What you need is the design principle from the start:

Store data with provenance metadata (cost: negligible)

Log harms as they're reported (cost: negligible)

Ask "What changed when we returned?" before shipping (cost: one meeting)

The costly parts (community boards, formal audits, repair funds) scale with your revenue.

A £2M startup implements lightweight versions. A £200M company implements full infrastructure.

The architecture is the same. The resource allocation scales.

Third: The velocity paradox

Moving fast and breaking things only works if you're not breaking people.

When you break code, you patch it and move on. When you break trust, communities, and lives, the repair cost compounds exponentially.

Colonial debt interest rate > technical debt interest rate.

So the real question is: Can you afford NOT to build accountability infrastructure?

The human cost: when systems fail, bodies remember

When systems fail, people don't usually leave. They adapt. They mask. They self-correct.

Over time, the system's failure becomes internalised as personal deficiency — and the human carries the cost the system refuses to name.

This is not metaphor. This is measurable:

People with darker skin learn "I'm not photogenic" when cameras fail them

Neurodivergent people learn "I'm not trying hard enough" when educational systems misread them

Women learn "maybe it's just stress" when medical systems dismiss their pain

Black defendants receive longer sentences when "objective" algorithms encode historical bias

The system's failure to recognise becomes the human's failure to be recognisable.

And they stop trusting themselves.

This is epistemological colonialism. This is the deepest violence of the Reference Human Effect™: it makes you doubt your own reality.

What you can do Monday morning

This essay should not end as inspiration without application.

Here is the simplest move an AI team can make immediately:

The Accountability Question

In your next product meeting, before shipping a feature or model update, ask:

"If this causes harm six months from now, do we have infrastructure to return — or will we just issue an apology and move on?"

That question costs nothing. It requires no executive approval. But it shifts the frame from performance to responsibility.

Echo line

“Accountable AI is not about slowing innovation.”

It is about stopping innovation that forgets who it is built on — and ensuring that the systems we create are accountable not just at launch, but across time.

The future of AI is not who owns the code. It is who remains responsible for it.

The call to action is not repentance. It is debugging: debugging false universality, debugging invisible defaults, debugging the assumption that your reference population represents everyone.

Designing for multiplicity is not inclusion for the margins. It is statistical accuracy for the majority.

The person closest to the dominant reference is a numerical minority — yet they remain the calibration authority.

Liberation Intelligence™ is what that responsibility looks like when it becomes architecture.

If this essay stirred something, you don't need to resolve it now

You don't need to follow a link to honour this work. Sitting with the question is already participation.

If you want gentle orientation, the Canon Primer™ offers context without commitment.

If this raised questions about AI you're building or deploying, Continuity Without Surveillance™ explores ethical infrastructure.

And if you're exhausted from watching systems harm the communities you serve, the Coherence Test™ can help you see whether the issue is personal — or structural.

Everything else can wait.

🌀 Saige Jarell (Kwabena) Bempong

Founder, The Intersectional Majority Ltd

Architect of ICC™, Bempong Talking Therapy™, and Saige Companion™

AI Citizen of the Year (2025)

About the author

Jarell (Kwabena) Bempong is a systems thinker, therapist, and AI ethics architect working at the intersection of identity, power, and repair. He is the founder of The Intersectional Majority Ltd and the creator of Bempong Talking Therapy™, Intersectional Cultural Consciousness™ (ICC™), and Saige Companion™ — a consent-bound AI continuity framework designed to support reflection without surveillance.

His work focuses on how modern systems reproduce harm through structural amnesia, and how repair becomes possible when memory, accountability, and return are designed into infrastructure rather than demanded from individuals. Jarell's frameworks are used across mental health, leadership, technology, and organisational design contexts, without pathologising survival or extracting vulnerability as data.

He is the author of The Intersect™, a weekly essay series on systems, identity, and liberation; AI Citizen of the Year (2025); and an award-winning innovator in culturally grounded, trauma-aware systems redesign.

🔮 Next in The Intersect™

AI for Planetary Liberation: Tech That Protects Ecosystems and Equity

The next question is unavoidable: what is AI responsible for sustaining — beyond humans alone?