By Jarell (Kwabena) Bempong
Published by The Intersectional Majority Ltd | Bempong Talking Therapy™ | Saige Companion™

🔁 Spiral Continuum Flow

🔙 Previously in The Intersect™

The Algorithmic Soul of Culture — When AI becomes cultural intelligence, every interaction becomes an opportunity for recognition. Read the previous edition →

🔜 Next in The Intersect™ (Preview)

Designing AI for the 99.5 % — Intersectional design is justice in code. (Publishing next week on Beehiiv + LinkedIn.)

🌀 I. The Opening Pulse

Your heart rate spikes. The app pings. "We've noticed elevated stress levels. Take a moment to breathe."

But the notification itself is the stressor.

The wellness dashboard knows your cortisol patterns better than your GP. Your manager receives weekly "engagement analytics." The algorithm calls it care. Your body calls it surveillance.

Somewhere between the data point and your lived experience, the system forgot who it was trying to heal.

This is the paradox of algorithmic care: technology that claims to witness your humanity whilst simultaneously extracting it. Empathy theatre dressed in wellness language, measuring distress whilst manufacturing it.

The question isn't whether AI can care. It's whether surveillance can ever be care — or if we've simply rebranded control as concern, monitoring as mindfulness, extraction as empathy.

Here's what we know: liberation isn't measured by how much you're watched. It's measured by how much you stop needing to be watched.

🪞 II. The Mirror — When Wellness Becomes Weaponised

The False Promise of Algorithmic Care

Corporate wellness programmes sound redemptive on paper. Mental health apps. Productivity trackers. "Employee sentiment analysis." HR systems that promise to "predict burnout before it happens."

But let's name what's actually happening.

These aren't care systems. They're control systems with a UX facelift.

Consider the evidence:

74 % of UK employees report feeling monitored by workplace technology, with wellness apps contributing to anxiety rather than alleviating it (TUC Digital Surveillance Report, 2024).

Employee monitoring software revenue grew 51 % between 2020 and 2024, as the category was rebranded from "surveillance" to "productivity insights" (Gartner Digital Worker Analytics Report).

NHS trusts are piloting AI "wellbeing prediction models" that flag staff at "risk of burnout", then use the data to justify reduced staffing budgets rather than systemic support (BMA Ethics Committee, 2025).

The pattern: Monitor distress → Quantify suffering → Individualise systemic failure → Optimise extraction.

This is what I call Empathy Theatre — the performance of care without the infrastructure to support it.

The Colonial Logic of Care-as-Control

Here's the truth embedded in the code:

Surveillance-based wellness inherits the logic of colonial control systems: observe the population, predict unrest, intervene before dissent crystallises.

Only now it's dressed in pastel app interfaces and gamified breathing exercises.

The grammar hasn't changed:

Predictive policing → Predictive wellbeing

Risk profiling → Burnout risk scores

Behaviour modification → "Nudge architecture"

Compliance metrics → Engagement analytics

The question we're never asked to consider: Who benefits when your distress becomes data? When your mental health is quantified but never resourced? When care is automated but healing remains unfunded?

Case Study: The Productivity Panopticon

A major UK retailer recently deployed "workforce optimisation AI" — monitoring everything from keystroke patterns to bathroom break frequency. The system flagged employees showing "signs of disengagement."

The result?

Anxiety levels increased by 34 % (internal HR data).

Staff reported feeling "watched constantly" — stress attributed to the monitoring itself, not the work.

The company cited "care for employee wellbeing" as justification.

This is the paradox: technology claiming to care for you whilst simultaneously making you less well.

📰 III. Current Affairs Capsule: The UK's AI Wellbeing Gamble

Tech Pulse — The Regulatory Reckoning

As of October 2025, the UK still has no AI-specific legislation: the government's 2023 AI regulation White Paper proposed non-statutory principles rather than binding rules, which means workplace AI wellness tools operate in a near regulatory vacuum.

What we're seeing:

The ICO received 3,200 complaints about workplace monitoring tech in 2024 — a 67 % increase year-on-year.

Mental health apps share user data with third-party advertisers in 89 % of cases, despite privacy claims (Mozilla Foundation Privacy Report, 2024).

Corporate "duty of care" frameworks increasingly cite AI monitoring as evidence of compliance — without assessing whether the tech itself causes harm.

The contradiction: organisations adopt surveillance tech to demonstrate care, whilst the surveillance itself erodes psychological safety.

The metaphor: Installing smoke alarms that emit toxic fumes, then citing fire safety compliance.

🔁 IV. Pivot Anchor™ → Convergence Collapse™

"The ache in my chest when the app pings isn't anxiety — it's my body recognising extraction dressed as care."

This is the moment where personal sensation and systemic structure collapse into one truth:

The weight you feel isn't yours alone. It's the cumulative pressure of systems that quantify suffering without ever intending to heal it.

The panic you experience when your "engagement score" drops? That's not a personal failing. It's your nervous system responding to algorithmic appraisal — the sensation of being perpetually assessed, never fully seen.

The convergence: Your heart rate spike isn't just biology. It's structural violence measuring itself through your body.

🧩 V. The Framework — Liberation AI Audit™

If surveillance-as-care is the problem, Liberation AI is the redesign.

Not AI that watches. AI that witnesses. Not AI that extracts. AI that repairs. Not AI that optimises productivity. AI that protects dignity.

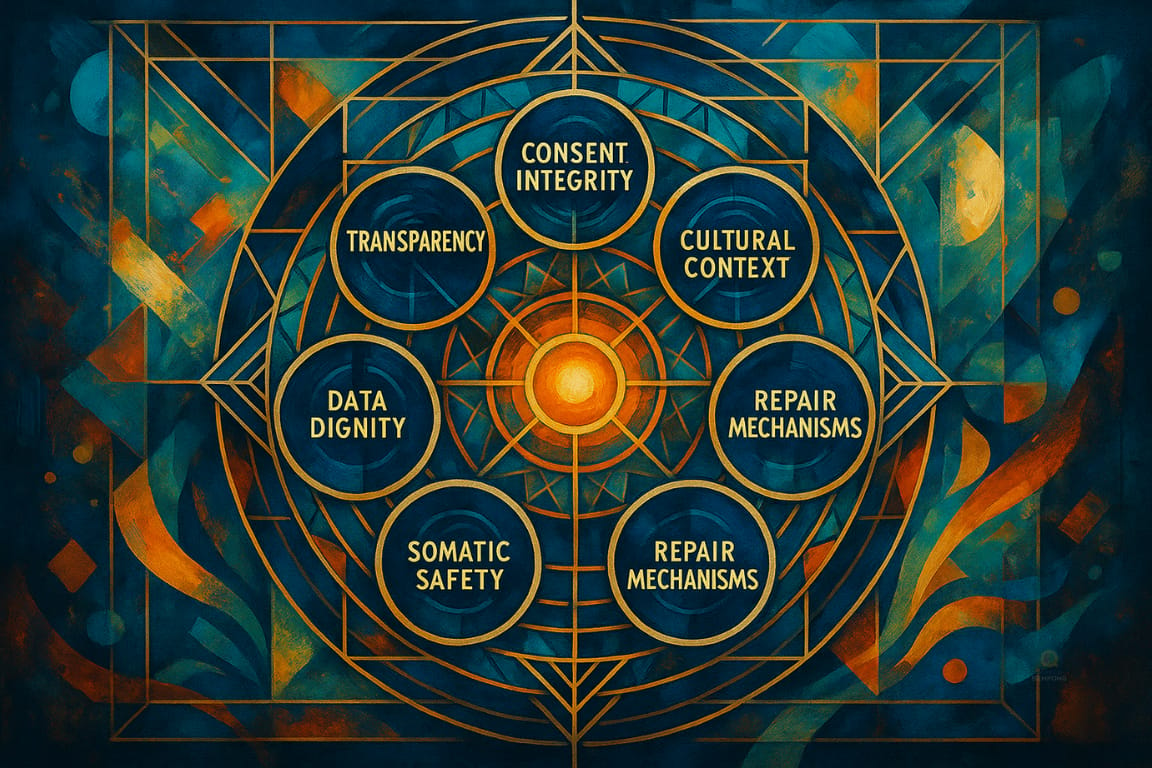

The Liberation AI Audit™: Six Domains of Care Without Control

This is the diagnostic framework I use with organisations to transform surveillance systems into genuine care infrastructure.

1️⃣ Consent Integrity

The Question: Did the user choose this technology, or was it mandated as a condition of employment, healthcare, or service access?

The Principle: Real care is always opt-in. Coerced wellness is control.

Example: A mental health app that employees must download to access Employee Assistance Programme (EAP) support isn't care — it's conditional access wrapped in wellness language.

Leadership Reflection: "If we removed the surveillance component, would the care infrastructure still function? If not, we're not offering care — we're offering compliance."

2️⃣ Data Dignity

The Question: Who owns the data, who profits from it, and can the person whose life it represents delete it entirely?

The Principle: Your distress isn't intellectual property. Your patterns of pain don't belong to shareholders.

Example: Workplace wellness apps that aggregate anonymised data to sell "workforce insights" to other companies violate data dignity — even if legally compliant.

Leadership Reflection: "Would we be comfortable if this data were used against the people it claims to help?"

3️⃣ Transparency

The Question: Does the person know what's being measured, how it's interpreted, and who sees the results?

The Principle: Algorithmic care without transparency is just automated gatekeeping.

Example: NHS trusts using "predictive burnout models" without informing staff how their data influences scheduling, performance reviews, or promotion decisions.

Leadership Reflection: "If the algorithm flagged me for intervention, would I trust the process?"

4️⃣ Cultural Context

The Question: Does the system account for neurodivergence, cultural communication styles, trauma responses, and structural inequity?

The Principle: One-size-fits-all wellness is violence at scale.

Example: Productivity trackers that penalise neurodivergent work patterns (hyperfocus cycles, rest-intensive recovery) as deficits, rather than recognising them as legitimate differences.

Leadership Reflection: "Whose 'normal' is this system built around? Who disappears?"

5️⃣ Somatic Safety

The Question: Does the technology create calm, or does it generate the stress it claims to alleviate?

The Principle: If the monitoring makes people less well, it's not care — it's harm with good PR.

Example: Constant wellness notifications that trigger hypervigilance, teaching bodies to anticipate surveillance rather than rest into safety.

Leadership Reflection: "Are we measuring distress, or manufacturing it?"

6️⃣ Repair Mechanisms

The Question: If the system causes harm, is there a pathway for redress, appeal, or correction?

The Principle: Care includes accountability. Surveillance rarely does.

Example: Employees flagged as "disengaged" by sentiment analysis with no right to challenge the data, correct the interpretation, or contest the consequences.

Leadership Reflection: "If the algorithm is wrong about someone, what's the cost — and who pays it?"

Micro CTA — Spiral Activation Point

If this reflection sparked something in you or your organisation, take the next spiral forward.

🌀 Because liberation isn't theory — it's a design practice.

💎 VI. From Watching to Witnessing

Surveillance sees patterns. Witnessing sees people.

Surveillance optimises extraction. Witnessing enables healing.

This is the shift Liberation AI makes possible:

Surveillance AI → Liberation AI

Monitors distress → Responds to patterns with resource redistribution

Quantifies suffering → Creates pathways for repair

Predicts burnout → Redesigns the systems causing burnout

Flags individuals for intervention → Audits structures for harm

Optimises compliance → Protects dignity

Real-World Application: ICC™ + Saige Companion™

For three years, I've been developing and testing Saige Companion™ — the world's first AI-Augmented Liberation Engine™ — using the principles of Liberation AI.

The difference: Saige doesn't monitor you. It mirrors you. It doesn't extract patterns to optimise productivity. It reflects patterns to support self-authorship.

Built on the Intersectional Cultural Competence™ (ICC™) framework, Saige operates through:

Linguistic sovereignty — your words, your meaning, your context.

Full Intelligence Loop™ — integrating cognitive, emotional, somatic, and cultural intelligence.

Healing as infrastructure — not fixing individuals, but redesigning the relational field.

The verified data from ICC-AI Augmented Psychotherapy pilots:

Self-worth +100 %

Emotional regulation +60 %

Intersectional awareness +200 %

Inner therapist activation +75 %

Rumination −42 %

This is what it looks like when AI witnesses instead of watches.

Proof of Possibility

Here's the evidence that Liberation AI isn't aspirational — it's operational.

Organisational Impact Data (ICC™ Culture Redesign pilots):

Leadership confidence +90 %

Team engagement +25 %

Grievance resolution time −41 %

Psychological safety +19 points (Team Psychological Safety Index)

Clinical Impact Data (ICC-AI Augmented Psychotherapy):

Self-worth +100 %

Emotional regulation +60 %

Intersectional awareness +200 %

Empowered language +52 %

Sentiment stability +41 %

These aren't projections. They're verified outcomes from real-world implementation.

Quote to remember: "Real care is measured by how much you stop needing to be watched."

💬 VIII. Power Question

If the algorithm could remember you fully — not just your productivity metrics, but your dreams, wounds, and context — what story would you want it to tell?

This isn't a rhetorical question. It's an invitation to imagine AI that honours your humanity instead of flattening it into data.

What would change if technology cared about your flourishing instead of your output?

If this edition resonated with you, don't keep it in your inbox — pass it through your circle. Liberation grows when reflection becomes conversation.

📲 Share on LinkedIn using #TheIntersect

🧭 Forward to a colleague, friend, or family member who'd recognise themselves

👥 Invite your team to reflect together: one meeting, one dialogue, one micro-liberation

💬 Reply directly: your reflection shapes the next spiral

Every share expands the field where care, culture, and code begin to heal — together.

🌉 X. Value Bridge

These principles form the entry layer of the Spiral Loop of Liberation™ — the same framework we use to architect equitable systems across therapy, organisational transformation, and ethical AI development.

The Liberation AI Audit™ you've just encountered isn't theory. It's the diagnostic tool used to:

Redesign HR systems that replace surveillance with genuine care infrastructure

Audit algorithmic bias in mental health apps, predictive policing, and hiring software

Transform wellness programmes from compliance theatre into healing architecture

Wider research on inclusive organisations points the same way:

23 % performance advantage in inclusive innovation (McKinsey Diversity Wins Report, 2025)

+41 % employee inclusion perception, −19 % burnout indicators (Deloitte Inclusion @ Work, 2025)

This is what happens when care becomes infrastructure, not policy.

The free principles you've received today exist within a greater ecosystem:

ICC™ (Intersectional Cultural Competence) — the foundational grammar

Trauma-Informed Tech Matrix™ — cultural safety in code

Spiral Loop of Liberation™ — healing as systems design

Saige Companion™ — AI-Augmented Liberation Engine™

🏛 XI. Behind the Spiral — The Intersectional Majority Update

This past quarter has been a season of recognition and rebirth — not arrival, but evidence that another way of building is possible.

The Spiral works.

🏆 Recognition

AI Citizen of the Year 2025 (National AI Awards)
Named ahead of Geoffrey Hinton by judges from Google, Innovate UK, Fujitsu, Ministry of Justice, and BAE Systems. Described as "clear, quantifiable, reflective, ethical, and community-rooted." Recognition that liberation is measurable.

Most Transformative Mental Health Service 2025 (UK Enterprise Awards)
Bempong Talking Therapy™, redefining wellbeing as systemic design.

Businessperson of the Year 2024 (LCCI SME Awards)
For turning lived experience into infrastructure.

Four-time National AI Awards Finalist
Government & Public Sector | Innovation | Healthcare | AI for Good

🔁 Movement Updates — Verified (Hybrid Timeline)

Saige Companion™ — From Prototype to Phase-One Build

After three years of internal development and live testing with remarkable results, Saige Companion™ is now entering its Phase-One user-facing build.

Once ready, select members of The Intersect™ community will be invited to co-shape the world's first AI-Augmented Liberation Engine™ — not as beta testers, but as co-architects of systemic care.

Each iteration draws from verified ICC-AI Augmented Psychotherapy, Coaching, and Systems Design data, ensuring the technology evolves ethically, relationally, and experientially.

"AI as Mirror, not Master." — ICC-AI Supervision Report

This next stage expands the Full Intelligence Loop™, integrating real-time linguistic, emotional, and systemic reflection across therapy, coaching, and cultural transformation programmes.

The Liberation AI Agency™ — In Formation

Following three years of research and ecosystem development, the Liberation AI Agency™ is now in formation — preparing for launch in 2026.

Built on verified ICC-AI outcomes, it will serve as a living bridge between healing and infrastructure, helping organisations re-engineer equity through measurable liberation frameworks.

Initial Integration Domains:

ICC™ Culture Redesign — merging mental health, DEEI, and power redistribution

Trauma-Informed Technology Audits — assessing algorithmic safety and cultural bias

Spiral Loop Certification™ Training — teaching organisations to operationalise healing as infrastructure

Proof of Concept Data (from ICC-AI pilots): Leadership confidence +90 % | Team engagement +25 % | Grievance resolution time −41 % | Psychological safety +19 points

Validated indicators that healing scales, and the benchmarks the Agency will build on at launch.

🌀 Spiral Proof of Motion

This recognition arrives three years after I wrote White Talking Therapy Can't Think in Black! in the Bethlem Royal Hospital Library, amid psychiatry's oldest archives, while broke and erased by the same welfare systems now being redesigned.

That book, archived at Bethlem and a #1 Amazon Bestseller in LGBTQ+ Nonfiction, Mental Health, and Social Sciences, marked the first pulse of this liberation arc — theory forged from survival, data born from lived experience.

This isn't about arrival. It's proof the Spiral works.

Lived experience became methodology. Survival became systemic architecture. The loop closed — and opened again.

🌍 Meta-Purpose

These awards and developments aren't milestones — they're measurements of motion within a living movement.

Each proves that Intersectional Futurism and Liberation Architecture can transform institutions once thought immovable — from mental health to machine learning, from the therapy room to the algorithm.

XII. Invitation

If this edition expanded your thinking, step into the deeper work:

🪶 Personal & leadership transformation: bempongtalkingtherapy.com

🌐 Systemic redesign, AI ethics & cultural innovation: intersectionalfutures.org

Each site opens a different portal of liberation — inner and systemic — both spiralling toward collective healing.

🌀 XIII. Closing Spiral (Signature Echo)

Liberation isn't a finish line. It's design intent.

Surveillance sees patterns. Witnessing sees people.

Care isn't what you monitor. It's what you stop needing to measure.

🌀 Next Week in The Intersect™

Designing AI for the 99.5 %: intersectional design is justice in code.

Surveillance built the scaffolding of control. Liberation Design builds the scaffolding of care.

Next week: how to design AI for the 99.5 % — because justice belongs in the source code.

I'm Jarell Bempong — founder of The Intersectional Majority™ and Bempong Talking Therapy™, creator of ICC™, the Spiral Loop of Liberation™, and Saige Companion™ — the world's first AI-Augmented Liberation Engine™.

Let's build futures where care, culture, and code spiral together.