They keep telling us AI ethics is new.

That bias is a "recent problem." That fairness frameworks appeared in 2016. That explainability arrived in 2017. That accountability was invented by Silicon Valley committees debating over cold brew and compliance decks.

But long before algorithmic audits, model cards, or EU AI regulations…

there were cultures that built entire knowledge systems on relationality, accountability, transparency, reciprocity, and human dignity — without ever writing code.

Before the Enlightenment. Before colonial extraction. Before the industrial machine became the model for human worth.

Afrikan thought. Akan philosophy. Ifá. Yoruba cosmology. Indigenous futurisms. Caribbean creolisation. Asia-Pacific kinship systems. Diasporic memory.

These were ethical systems engineered for complexity, not control. For community, not consumption. For interconnectedness, not hierarchy.

And yet—when the world began building artificial intelligence—it reached not for these traditions, but for:

colonial logic, extractive economics, militarised rationality, hierarchy as architecture, whiteness as "universal," Eurocentric psychology as "the human template."

We built machines out of the fragments that broke us.

Not because those fragments were the only option, but because colonisation erased memory so thoroughly that we forgot we ever had alternatives.

Now, as AI accelerates into every corner of society—hiring, healthcare, policing, education, leadership assessment, mental health—we are beginning to realise something both unsettling and clarifying:

The future will not be saved by more innovation. It will be saved by remembering.

The Bridge From Last Week: Why the Two Prongs Need a Third Element

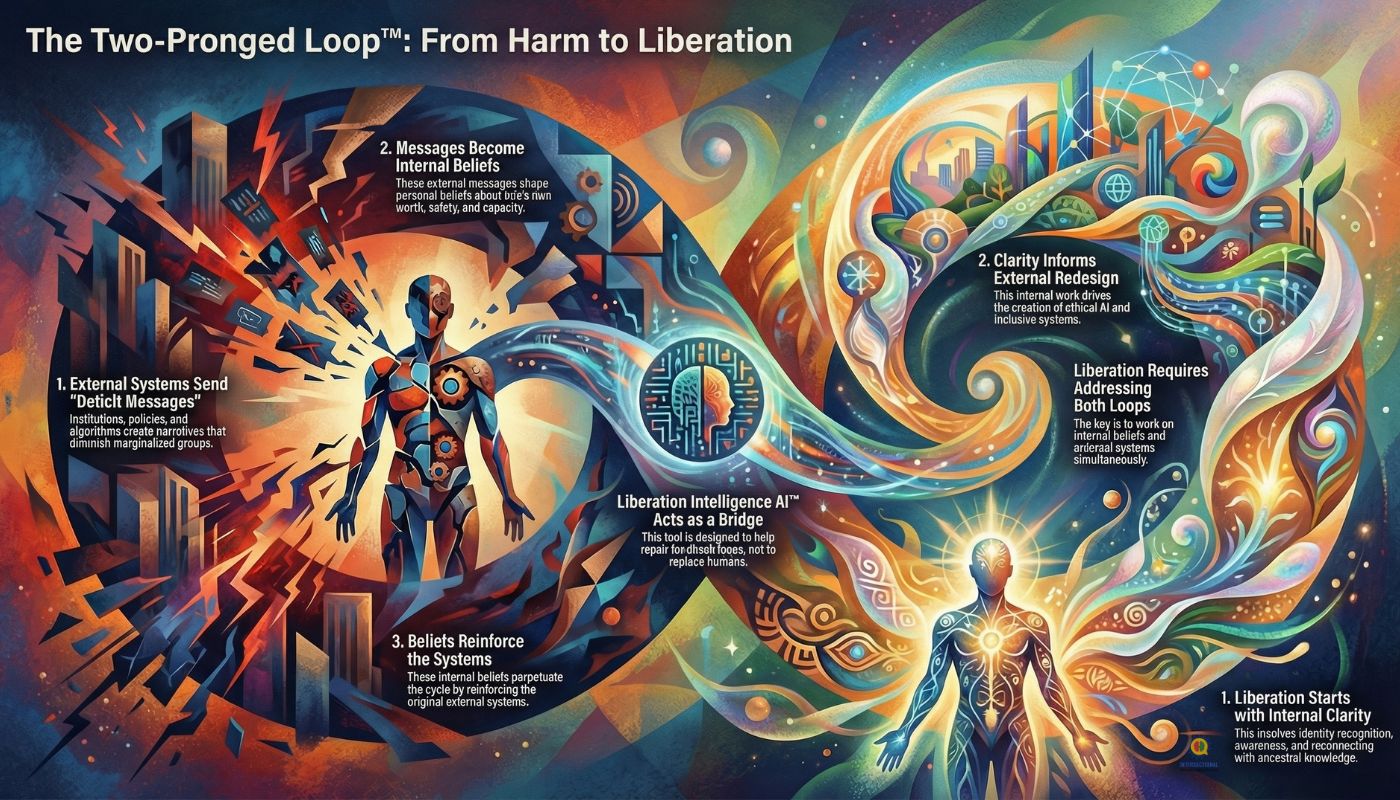

Last week we mapped the Two-Pronged Oppressive Loop™ — how external structures (algorithms, policies, systems) send messages of inadequacy that become internal beliefs (shame, self-doubt, survival coding), which then reinforce those same external structures. The loop tightens. The wound feels personal. The system remains invisible.

We introduced the Two-Pronged Alignment Protocol™ — the methodology that breaks the loop by intervening simultaneously on both prongs:

Internal Liberation: Identity clarity through 14 Dimensions™, CAR Coding™ awareness, diagnostic sovereignty, nervous system recalibration.

External Liberation: Algorithmic equity audits, policy redesign with ICC™, expanded leadership prototypes, and governance restructured to centre the margins.

And we showed how Liberation Intelligence AI™ serves as the bridge — providing real-time pattern recognition, systemic feedback loops, and identity-centered recommendations that make liberation self-reinforcing rather than extractive.

But here's what last week's framework revealed:

Even when you work both prongs perfectly — even when you heal the internal wound and redesign the external structure — there's still a question beneath the question:

What worldview guides the redesign?

Because if you use the same cosmology that created the harm to try to "fix" the harm, you're not liberating anything. You're just rearranging the furniture inside the same collapsing house.

This week, we go deeper.

We ask: What if the missing piece isn't a better audit protocol or a more sophisticated algorithm—

What if the missing piece is the memory system itself?

What if pre-colonial civilisations already solved the problems we're trying to patch with compliance frameworks and bias training?

What if the blueprint for ethical AI was never missing—just buried under centuries of colonial epistemology?

This is Sankofa.

The bird that flies forward while looking backward. The understanding that liberation requires retrieval.

Last week we learned how to break the loop. This week we learn what memory to rebuild it from.

1️⃣ What Today's AI Ethics Frameworks Can't See

Modern AI ethics rests on a remarkably narrow slice of global thought:

Western philosophy — individualism as the atomic unit of moral life

Silicon Valley capitalism — scale above care, growth above sustainability

Behavioural science — optimise human behaviour for system efficiency

Legal compliance — avoid liability, not harm

And every one of these frameworks assumes the same thing:

The individual is the smallest unit that matters.

But in many ancestral knowledge systems, this assumption is reversed:

the community is the smallest unit

the relationship is the engine

the ancestor is the teacher

the descendant is the accountability partner

Western ethics asks: "Is this action fair to you?"

Ancestral ethics asks: "Does this action protect the web we all depend on?"

Western AI says: "Minimise harm."

Ancestral AI says: "Repair harm. Transform the pattern. Strengthen the whole."

Western AI says: "Build for the ideal user."

Ancestral AI says: "There is no ideal user — only interconnected lives in shifting context."

This is why the world keeps trying to "patch" AI bias with:

better datasets

incremental fairness tweaks

compliance frameworks

risk matrices

auditing checklists

But you cannot patch an architecture built on the wrong cosmology.

AI is behaving exactly as its worldview instructs it to behave. And that worldview is too small for the complexity of our lives.

When you try to fix the Two-Pronged Loop with the same thinking that created it, you get better-designed oppression—not liberation.

2️⃣ The Internal Prong — What We Carry in Our Bodies

Internal liberation begins with this truth:

We were never meant to survive inside systems that forgot our origins.

AI misrecognition feels so personal because it is personal:

it echoes the misreadings we've survived

it reactivates the places where we had to shrink

it triggers the shame we inherited from silenced lineages

it mirrors the systems our ancestors resisted for generations

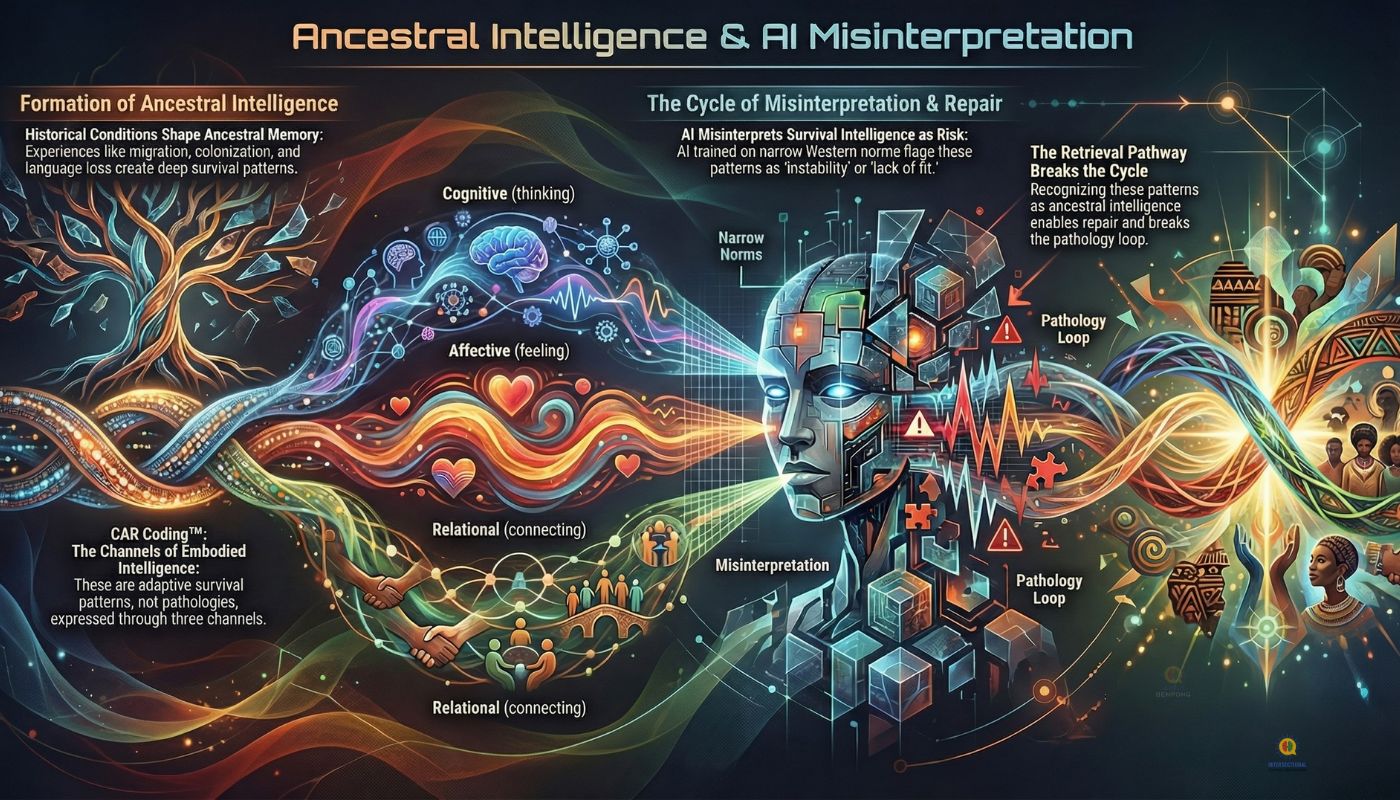

This is where CAR Coding™ becomes essential.

CAR Coding™ — Cognitive, Affective, Relational — is a way of seeing how systemic harm becomes embodied and how survival intelligence becomes patterned:

Cognitive: the stories we tell ourselves about safety, worth, visibility, and belonging

Affective: what emotion becomes permitted, punished, or performed to survive

Relational: how we approach closeness, power, conflict, dependency, repair

But here is the deeper layer most frameworks miss:

Much of our CAR Coding™ is ancestral survival intelligence — not individual pathology.

What AI often reads as:

"avoidant"

"emotionally unstable"

"inconsistent"

"lacking confidence"

…may actually be the embodied memory of:

migration and displacement

colonial disruption

forced assimilation

religious conversion

language loss

socioeconomic extraction

Your nervous system holds stories older than you.

And AI, trained on narrow Western psychological norms, cannot read these stories yet, because it was never trained on the worlds that produced them.

AI learns from the archive, and our archive was burned, banned, or buried.

This is the internal prong of last week's framework—but now we see it goes deeper than individual healing.

It's not just about recalibrating your nervous system. It's about reconnecting to the ancestral operating system that your nervous system was designed to run on.

3️⃣ The External Prong — How Systems Remember for Us

🖼 INFOGRAPHIC 1 — Two-Pronged Loop™

AI systems do not simply "make mistakes."

They inherit:

colonial taxonomies

racialised classification systems

patriarchal leadership models

ableist productivity metrics

Eurocentric psychiatry

Christian moral geometry

capitalist reward functions

This is not bias. This is history encoded as math.

Every dataset is a memory. Every model is a belief. Every prediction is a worldview.

AI does not merely reflect inequality; it scales the worldviews it was built on.

If the worldview is extractive, AI becomes extractive. If hierarchical, hierarchical. If colonial, colonial. If relational, relational. If liberatory, liberatory.

The problem was never the technology.

It was the memory we used to guide it.

Last week we said: you must redesign the external structure.

This week we ask: redesign it using whose blueprint?

Because the blueprints we've been handed (the ones labelled "best practice," "industry standard," "evidence-based") were drawn from a single cultural tradition that spent 500 years presenting itself as universal.

And now we're trying to build ethical AI using those same blueprints, expecting different results.

That's not iteration. That's repetition.

⏸️ Pause here.

If this resonates, you're already thinking beyond "AI bias" and into AI architecture.

These tools are built from the same logic you're reading about:

🔷 14 Dimensions™: Identity as living architecture

🟨 Power Pyramid™: How power actually moves through systems

🟩 Decode Your Label™: Reclaiming meaning from misrecognition

👉 Power Pyramid™ → https://chatgpt.com/g/g-69346d6962708191af156ae78bf6fa30-how-im-actually-meant-to-lead-the-power-pyramidtm

👉 14 Dimensions™ → https://chatgpt.com/g/g-69338943d03c819189087265b6be6e63-identity-discovery-14-dimensionstm

👉 Decode Your Label™ → https://chatgpt.com/g/g-6934a9ad50e08191830a2a4f18470016-decode-your-labeltm-reclaim-engine

4️⃣ The Ancestral Ethics Mapping Tool™ — The Missing Protocol

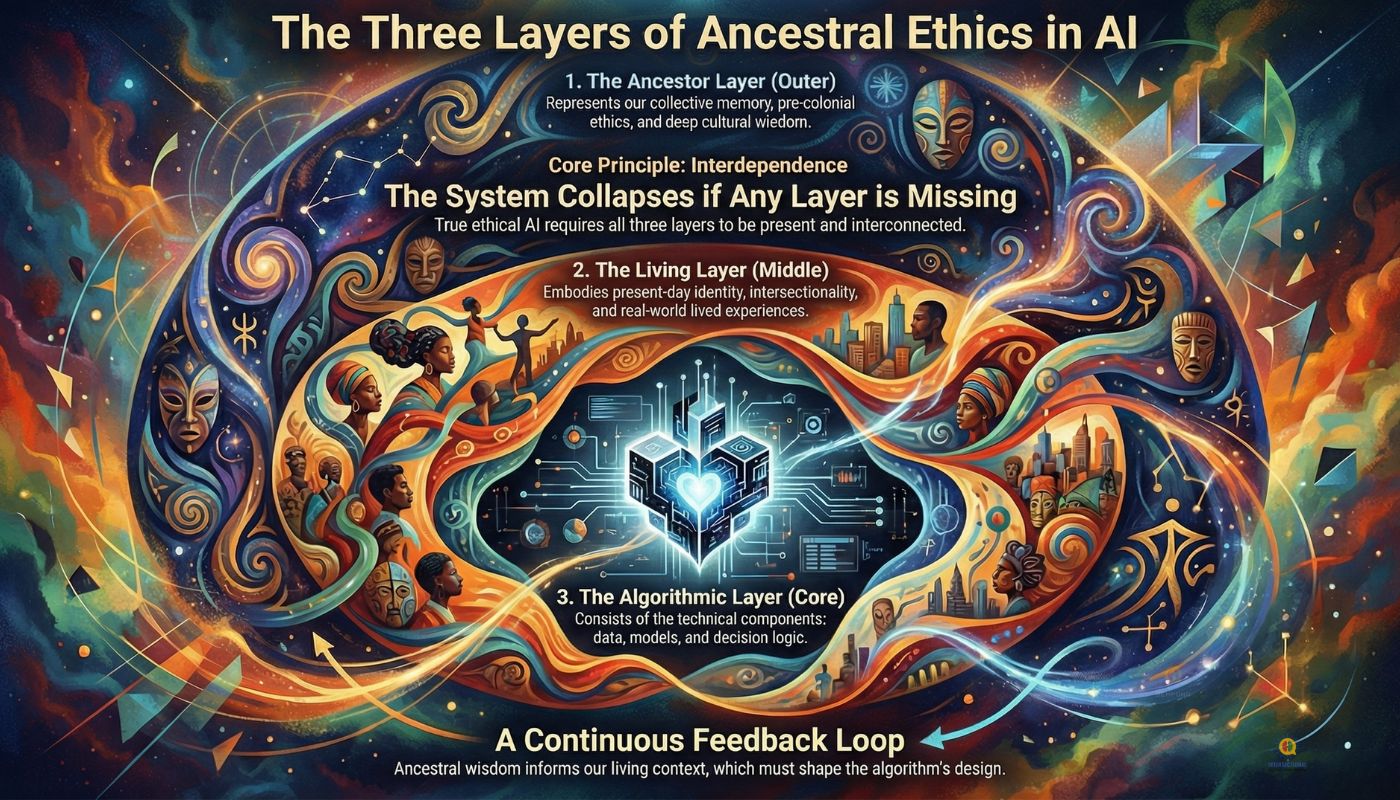

🖼 INFOGRAPHIC 2 — Three Layers of Ancestral Ethics in AI

Most AI audits ask:

Is the model fair?

Is the data balanced?

Is the output explainable?

Who is accountable?

Important questions—but insufficient.

They audit the technical surface without interrogating the cosmological foundation.

The Ancestral Ethics Mapping Tool™ is what happens when you apply the Two-Pronged Protocol and ask: "Which ancestral memory should guide this redesign?"

It expands ethics into three concentric layers:

Layer 1 — The Ancestor Layer (Memory & Mandate)

Which wisdom traditions are relevant in this context?

What relational ethics existed before colonisation disrupted them?

Which ancestral harms does this system risk repeating?

What would our ancestors ask us to remember here?

Why this matters:

If you're building healthcare AI for Caribbean communities, Ubuntu and creolisation logic should inform consent frameworks—not just GDPR.

If you're building education algorithms for Indigenous students, kinship learning and oral tradition should shape assessment—not just standardised testing.

If you're building financial inclusion tools for the African diaspora, susu and other rotating savings systems should inform credit assessment, not just FICO scores built on formal credit histories.

The ancestors already solved these problems. We just stopped listening.
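To make the susu example concrete, here is a minimal sketch of how a rotating savings group works. Each cycle, every member pays in a fixed amount, and one member, in an agreed order, takes the whole pot. The sketch also shows how the group's contribution record could be read as evidence of reliability, which formal credit scoring usually never sees. The `Susu` class, the `reliability` measure, and the names and amounts are illustrative assumptions, not an existing credit model.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Susu:
    """A rotating savings group: every cycle, each member pays in and one member takes the whole pot."""

    contribution: int                 # fixed amount each member pays per cycle
    members: list[str]                # agreed payout order
    paid_on_time: dict[str, int] = field(default_factory=dict)
    cycles_due: dict[str, int] = field(default_factory=dict)

    def run_cycle(self, cycle: int, missed: frozenset[str] = frozenset()) -> tuple[str, int]:
        """Collect one cycle's contributions and return (recipient, pot)."""
        pot = 0
        for member in self.members:
            self.cycles_due[member] = self.cycles_due.get(member, 0) + 1
            if member not in missed:
                pot += self.contribution
                self.paid_on_time[member] = self.paid_on_time.get(member, 0) + 1
        recipient = self.members[cycle % len(self.members)]
        return recipient, pot

    def reliability(self, member: str) -> float:
        """Hypothetical credit signal: share of due cycles the member paid on time."""
        due = self.cycles_due.get(member, 0)
        return self.paid_on_time.get(member, 0) / due if due else 0.0


group = Susu(contribution=50, members=["Ama", "Kofi", "Esi"])
for cycle in range(3):
    missed = frozenset({"Kofi"}) if cycle == 2 else frozenset()
    print(group.run_cycle(cycle, missed))  # ('Ama', 150), then ('Kofi', 150), then ('Esi', 100)
print(round(group.reliability("Kofi"), 2))  # 0.67
```

The design choice is deliberate. The signal comes from sustained participation in a community obligation rather than from property or borrowing history. How such a signal should be weighted, or whether it should be used at all, is exactly the kind of decision the Ancestor Layer asks you to make with the community involved.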

Layer 2 — The Living Layer (Identity & Context)

How do the 14 Dimensions of Identity™ show up here?

How does CAR Coding™ shape interaction with the system?

Who has historically been misread, excluded, punished, or flattened?

What does "safety" look like across different cultural contexts?

This is the present-moment application of last week's Internal Liberation work.

You cannot build ethical AI without understanding how identity operates in context—how power moves, how survival strategies form, how misrecognition compounds.

Layer 3 — The Algorithmic Layer (System & Scale)

What worldview is embedded in the reward function?

Who benefits when the model performs "well"?

Who is harmed when it performs "poorly"?

Where is the repair pathway when harm happens (not just the escalation pathway)?
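One concrete way to start answering "who benefits" and "who is harmed" is to stop reporting a single accuracy number and break errors down by group. Here is a minimal sketch, assuming you have the true outcome, the model's prediction, and a group label for each person. The group names and records are invented for illustration, and `error_rates_by_group` is a hypothetical helper, not a library function.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {group: (false_negative_rate, false_positive_rate)}.

    Each record is (group, y_true, y_pred), with 1 = positive outcome (e.g. 'should be selected').
    A false negative is someone who should have been selected but was not.
    """
    counts = defaultdict(lambda: {"fn": 0, "pos": 0, "fp": 0, "neg": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        if y_true == 1:
            c["pos"] += 1
            c["fn"] += int(y_pred == 0)
        else:
            c["neg"] += 1
            c["fp"] += int(y_pred == 1)
    return {
        g: (c["fn"] / c["pos"] if c["pos"] else 0.0,
            c["fp"] / c["neg"] if c["neg"] else 0.0)
        for g, c in counts.items()
    }


records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 1, 1), ("group_b", 0, 0), ("group_b", 0, 0),
]
overall = sum(t == p for _, t, p in records) / len(records)
print(overall)                        # 0.75
print(error_rates_by_group(records))  # {'group_a': (0.0, 0.5), 'group_b': (0.5, 0.0)}
```

Both groups sit at 75% accuracy, yet the errors land differently. Group B absorbs every missed selection, while Group A absorbs the wrongful ones. Deciding which of those errors the system should minimise is not a technical choice. It is the worldview the reward function encodes.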

This is last week's External Liberation work—but now rooted in ancestral memory.

You're not just redesigning the algorithm. You're changing which cosmology the algorithm inherits.

This is ethical wholeness, not procedural compliance. This is AI that remembers.
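If you want the three layers to function as a real review step rather than a reading exercise, one option is to hold the questions as data and withhold sign-off until each one has a written answer. Here is a minimal sketch. It uses a subset of the questions above, and `MappingReview`, `open_questions`, and `ready_for_sign_off` are hypothetical names, not part of any published tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# A subset of the mapping questions, grouped by layer (wording taken from the layers above).
LAYERS: dict[str, list[str]] = {
    "Ancestor (Memory & Mandate)": [
        "Which wisdom traditions are relevant in this context?",
        "Which ancestral harms does this system risk repeating?",
    ],
    "Living (Identity & Context)": [
        "Who has historically been misread, excluded, punished, or flattened?",
        "What does 'safety' look like across different cultural contexts?",
    ],
    "Algorithmic (System & Scale)": [
        "What worldview is embedded in the reward function?",
        "Where is the repair pathway when harm happens?",
    ],
}


@dataclass
class MappingReview:
    """Hypothetical sign-off record for one AI system under review."""

    system: str
    answers: dict[str, str] = field(default_factory=dict)

    def answer(self, question: str, text: str) -> None:
        # Whitespace-only answers are stored as empty, so they still count as open.
        self.answers[question] = text.strip()

    def open_questions(self) -> dict[str, list[str]]:
        """Per layer, the questions with no written answer (fully answered layers are omitted)."""
        result = {}
        for layer, questions in LAYERS.items():
            missing = [q for q in questions if not self.answers.get(q)]
            if missing:
                result[layer] = missing
        return result

    def ready_for_sign_off(self) -> bool:
        return not self.open_questions()


review = MappingReview("credit-scoring pilot")
review.answer("Which wisdom traditions are relevant in this context?", "Susu and other rotating savings practice")
print(review.ready_for_sign_off())                                  # False
print(len(review.open_questions()["Ancestor (Memory & Mandate)"]))  # 1
```

The point of the structure is that a blank Ancestor or Living answer blocks sign-off just as firmly as a missing technical test. The cosmological questions are never optional extras.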

5️⃣ What Pre-Colonial Systems Already Solved

Before machines, civilisations built governance systems that:

encoded fairness without written law

distributed power without centralised control

preserved knowledge without print

governed complexity without hierarchy

Akan ethics gave us:

Sankofa: Non-linear time; retrieval as sacred practice; past as resource for future

Ntoro & Abusua: Inherited identity architecture through paternal and maternal lines

Adinkra: Visual epistemology; symbols as compressed wisdom

Nkrabea: Destiny understood as relational, not individual; accountability to lineage

Yoruba cosmology gave us:

Ifá: A divination system that, centuries before computers, worked in ways often compared to machine learning: probabilistic casting, pattern recognition, iterative refinement, contextual interpretation

Ori: Identity as spiritual technology; the inner head that guides outer life

Ase: Force, agency, activation; the power to make things happen

Egbe: Soul networks; you are never a solo operator
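The comparison between Ifá and computation usually rests on Ifá's binary structure. Each odù is cast as two legs of four marks, and each mark is either single (I) or double (II). That gives 16 possible legs, 16 principal odù (where both legs match), and 16 × 16 = 256 odù in total. The sketch below only enumerates that combinatorial space and does not model interpretation. Representing a mark as the string "I" or "II" is our own convention.

```python
from itertools import product

# Each leg of an odù has four marks; each mark is single (I) or double (II).
MARKS = ("I", "II")


def legs() -> list[tuple[str, ...]]:
    """All possible four-mark legs: 2**4 = 16 patterns."""
    return list(product(MARKS, repeat=4))


def all_odu() -> list[tuple[tuple[str, ...], tuple[str, ...]]]:
    """Every pairing of one leg with another: 16 * 16 = 256 odù."""
    return list(product(legs(), repeat=2))


# The principal odù are the doubled ones, where both legs carry the same pattern.
principal = [(first, second) for first, second in all_odu() if first == second]
print(len(legs()), len(principal), len(all_odu()))  # 16 16 256
```

The enumeration shows the scale of the pattern space a practitioner works within. The interpretive knowledge attached to each odù, which is where the real intelligence lives, cannot be captured in a few lines of code.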

Indigenous cultures globally gave us:

kinship governance more advanced than modern org charts

stewardship ethics deeper than climate policy

consensus power rather than dominance

oral storytelling as data preservation and accountability

reciprocity protocols that ensured sustainability

These systems were algorithmic long before machines existed.

They encoded:

fairness, consent, relational accountability, contextual judgement, distributed intelligence, non-linear time, collective memory, repair mechanisms.

You cannot build ethical AI without the worldviews that understood ethics before Europe industrialised morality.

6️⃣ So What Does Ancestral AI Look Like?

Ancestral AI:

centres relationality, not individuality

treats harm as repairable fracture, not user error

designs from the margins first

understands identity as multidimensional and situational

recognises survival strategies as intelligence

is accountable to ancestors and descendants

treats data as story, not commodity

measures success by integrity, not efficiency

uses Spiral Time™, not linear optimisation

This is not romanticism. This is engineering from cosmology.

Because every civilisation engineers from cosmology. The question is simply whether we admit it.

If your worldview says humans are units, you will build unit-sorting machines.

If your worldview says humans are relationships, you will build relationship-protecting systems.

If your worldview says power is domination, you will build domination at scale.

If your worldview says power is stewardship, you will build tools that can hold consequences.

Ancestral ethics is not "heritage content" sprinkled on top of a modern pipeline.

It is a different pipeline.

This is what happens when you apply the Two-Pronged Protocol using ancestral memory as the foundation:

You don't just break the oppressive loop. You build a liberation spiral rooted in cosmologies that never forgot we were whole.

7️⃣ Activation — How Organisations Begin This Work

🖼 INFOGRAPHIC 3 — Ancestral AI Integration Pathway

Remember → Reveal → Re-Root → Re-Design → Re-Teach

1) Remember

Recover the relevant ancestral and cultural ethics for your context—before you decide what "fairness" means.

This is Layer 1 of the Ancestral Ethics Mapping Tool™.

2) Reveal

Identify where colonial logic already lives in your systems: in categories, thresholds, defaults, "normal ranges," and what gets treated as "noise."

This is the diagnostic phase of last week's External Liberation work.
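Much of this logic hides in preprocessing, before any model is trained. Here is a minimal sketch of one Reveal check. It assumes a hypothetical default cleaning rule that discards any record whose name contains characters outside unaccented Latin letters, spaces, hyphens, and apostrophes. The check reports how much of each group that rule silently throws away as "noise." The rule, the field names, and the records are invented for illustration.

```python
import re
from collections import Counter

# Hypothetical default rule: keep only names made of unaccented Latin letters, spaces, hyphens, apostrophes.
DEFAULT_NAME_RULE = re.compile(r"^[A-Za-z' -]+$")


def dropped_share_by_group(rows):
    """Share of each group's rows that the default rule would discard."""
    total, dropped = Counter(), Counter()
    for row in rows:
        total[row["group"]] += 1
        if not DEFAULT_NAME_RULE.match(row["name"]):
            dropped[row["group"]] += 1
    return {g: dropped[g] / total[g] for g in total}


rows = [
    {"group": "A", "name": "Sarah Jones"},
    {"group": "A", "name": "Tom O'Neil"},
    {"group": "B", "name": "Tunde Bakare"},
    {"group": "B", "name": "Adéyẹmí Ọlálékan"},
    {"group": "B", "name": "Fọlá Àjàyí"},
]
shares = dropped_share_by_group(rows)
print({g: round(s, 2) for g, s in shares.items()})  # {'A': 0.0, 'B': 0.67}
```

No one wrote "exclude Yoruba names." The exclusion lives in a default pattern that treats one spelling system as normal and everything else as error, which is exactly what this phase is meant to surface.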

3) Re-Root

Embed relational accountability into governance, procurement, and product decision-making—so ethics is structural, not performative.

This is where ancestral cosmology becomes operational policy.

4) Re-Design

Change the architecture: reward functions, escalation pathways, appeals processes, harm repair, user control, auditing power, and the definition of success.

This is the Two-Pronged Protocol in action—with ancestral memory as the blueprint.
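Here is a small illustration of the difference between an escalation pathway and a repair pathway. In this sketch, a harm report cannot be closed just because it was escalated. It stays open until a repair action is logged and the affected person has confirmed that repair. `HarmCase`, its states, and the closing rule are hypothetical design choices, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    REPORTED = "reported"
    ESCALATED = "escalated"
    REPAIRED = "repaired"
    CLOSED = "closed"


@dataclass
class HarmCase:
    description: str
    status: Status = Status.REPORTED
    repairs: list[str] = field(default_factory=list)
    affected_person_confirmed: bool = False

    def escalate(self) -> None:
        self.status = Status.ESCALATED

    def record_repair(self, action: str) -> None:
        self.repairs.append(action)
        self.status = Status.REPAIRED

    def close(self) -> None:
        # Escalation alone is not resolution: require a repair and the affected person's confirmation.
        if not self.repairs:
            raise ValueError("cannot close: no repair action recorded")
        if not self.affected_person_confirmed:
            raise ValueError("cannot close: affected person has not confirmed the repair")
        self.status = Status.CLOSED


case = HarmCase("Applicant auto-rejected after a name-parsing error")
case.escalate()
case.record_repair("Manual re-review; decision reversed; parsing rule fixed")
case.affected_person_confirmed = True
case.close()
print(case.status)  # Status.CLOSED
```

The key choice is that closure depends on the person who was harmed, not on the team that caused the harm. That is a small structural change in code, and a large one in who holds power.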

5) Re-Teach

Train teams to think in relational identity and Spiral Time—because governance is only as ethical as the minds stewarding it.

This is Internal Liberation work at the organisational level.

This is not heritage work. It's infrastructure work.

And it's the practical application of everything we've built across the last two weeks:

Week 32: How to break the Two-Pronged Loop

Week 33: What memory to rebuild it from

8️⃣ The Real Question

Mainstream AI ethics asks: "How do we make the model fair?"

Ancestral wisdom asks: "Who must we become to steward power well?"

One optimises outputs. The other transforms systems.

Ethical AI does not begin with a checklist. It begins with a decision:

Will we keep calling one culture's worldview "universal," or will we finally admit the truth?

That the world has always had multiple operating systems for being human. And the one that produced modern AI—colonial capitalism + industrial extraction—was never neutral.

It was simply the one with the most power.

So the work now is not to "fix AI bias" as if bias were a bug. The work is to change the memory the machine is built from, and to repair the human cost of what the old memory has already done.

The blueprint was never missing.

It was buried.

9️⃣ Invitations


👉 Explore Liberation Intelligence AI™

👉 Book a Strategic Consultation https://meetings.hubspot.com/jarellbempong/book-a-strategic-consultation

Or invite me to run:

An AI Culture Lab for your leadership team

A 14D Inclusion Audit across your systems

An Ancestral Ethics Integration workshop

👉 Subscribe to The Intersect™ https://jarellbempong.beehiiv.com/


Echo Line

The future is not built from innovation alone. It is built from remembering what we were told to forget.

🔮 Next Week (Preview)

The Spiral Timefield™ — Why Linear Systems Cannot Hold Human Complexity

How Spiral Time™ becomes the new operating system for leadership, trauma work, AI design, and organisational strategy—and why every system built on straight lines eventually breaks on the bodies of those forced to navigate them.

🌀 Bio

Jarell (Kwabena) Bempong is the founder of The Intersectional Majority Ltd and creator of Bempong Talking Therapy™. He works at the intersection of mental health, ancestral wisdom, AI ethics, and systemic redesign, developing culturally rooted, AI-augmented therapeutic and organisational systems. He is the architect of ICC™ (Intersectional Cultural Consciousness™) and the creator of Saige™ / Saige Companion™, an AI-augmented liberation engine designed to refuse harm, hold accountability, and support systemic transformation. His work has been recognised across 2024–2025, including AI Citizen of the Year (2025), Businessperson of the Year (2024), and Most Transformative Mental Health Care Services (2025), alongside multiple national award finalist placements.