They promised AI would make workplaces fairer.

They promised automation would remove bias.

They promised algorithms couldn't discriminate because they didn't have feelings.

But when we look closely at the systems now shaping hiring, healthcare, leadership, education, policing, and even mental health, we see something undeniable:

AI isn't neutral. AI is obedient.

Obedient to the culture that built it. Obedient to the histories embedded in its data. Obedient to the identities it was trained to recognise — and the ones it was trained to overlook.

And when a system cannot see you, it cannot serve you.

You cannot heal a wound that's still being inflicted. Until we name the hands, patterns, and systems shaping AI, we cannot build AI cultures people can trust.

This is where the next era of DEI begins — not in compliance statements or posters on the wall, but in the architecture of the intelligent systems now mediating opportunity, safety, and care.

The question isn't whether AI will shape the future of equity work; it already does. The question is whether we will shape AI in return.

And beneath that sits something deeper:

What if the real question isn't whether your organisation is ready for AI… but whether your AI is ready for your people?

1️⃣ When Bias Goes Digital

There is a myth that technology is "objective."

But bias doesn't disappear in digital spaces — it scales.

A hiring manager's habits become a ranking system. A school's prejudice becomes a predictive score. A therapist's cultural misattunement becomes a wellness script. A boardroom's homogeneity becomes a leadership model.

AI doesn't invent inequality. It automates whatever the world already believes.

The Algorithmic Mirror

Train a hiring model on ten years of "successful" employees and it doesn't learn to spot talent; it learns to spot proximity to the race, class, body, accent, education, and neurotype of the people already hired. Names become risk indicators. Postcodes become proxies for class. Gaps in work history, often caused by care, illness, or systemic exclusion, become red flags instead of evidence of resilience.

The algorithm becomes a mathematically polished mirror of historical inequity, dressed in the language of optimisation.

This is algorithmic monoculture — when systems are trained almost entirely on dominant-identity data and then deployed universally, as if one pattern of success represents all patterns of success.
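One way to see how a seemingly neutral field can stand in for identity is to check how well that field alone predicts group membership. The sketch below is illustrative only: the `postcode` and `group` fields, the function names, and the six sample records are all hypothetical and not drawn from any real system.

```python
from collections import Counter, defaultdict

def baseline(records, protected):
    """Accuracy of always guessing the most common protected value."""
    counts = Counter(r[protected] for r in records)
    return counts.most_common(1)[0][1] / len(records)

def proxy_strength(records, feature, protected):
    """Accuracy of guessing the protected value from the feature alone,
    by predicting the most common protected value per feature value."""
    by_value = defaultdict(Counter)
    for r in records:
        by_value[r[feature]][r[protected]] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    return correct / len(records)

# Hypothetical records: postcode is never labelled as identity data,
# yet it carries information about group membership.
records = [
    {"postcode": "A1", "group": "x"},
    {"postcode": "A1", "group": "x"},
    {"postcode": "A1", "group": "y"},
    {"postcode": "B2", "group": "y"},
    {"postcode": "B2", "group": "y"},
    {"postcode": "B2", "group": "y"},
]

base = baseline(records, "group")                      # 4/6
lift = proxy_strength(records, "postcode", "group")    # 5/6
print(f"baseline={base:.2f} with_postcode={lift:.2f}")
```

The larger the gap between the two numbers, the more the field behaves like a proxy. Removing the identity column from a dataset does not remove the signal if a field like this stays in.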

Velocity, Reach, and the Trust Crisis

A biased manager might misjudge 200 people a year. A biased model can misread 200,000 in an afternoon.

When bias is encoded, it becomes infrastructure. And infrastructure is invisible until it breaks — usually on the people least resourced to challenge it.

Public trust in AI — especially in public services — is low for good reason. People have watched systems fail them before. They are not afraid of technology; they are afraid of repetition:

tools trained on biased data,

algorithms that scale existing harm,

"black box" systems where no one seems accountable.

This is why the future of DEI cannot be separated from the future of AI. Our task now is to prove that AI can break historical patterns of harm, not simply accelerate them.

2️⃣ What AI Gets Wrong About Culture

The problem isn't only that AI is biased.

The problem is deeper: AI has inherited a worldview.

That worldview assumes a narrow "normal": how a professional speaks, how a leader behaves, how distress presents, what a "good citizen" looks like.

I know this rupture from the inside.

I'm a Black, British-Ghanaian, gay, dyslexic, neurodivergent man who was repeatedly misread by systems that couldn't see my mind. Schools labelled my divergence "deficit." Workplaces called my communication "unprofessional." Healthcare systems pathologised cultural expressions of distress they couldn't recognise.

I didn't fail those systems. Those systems failed to build the perceptual architecture to see me whole.

Today I work as a therapist, AI cultural strategist, and liberation architect. Frameworks like ICC™ (Intersectional Cultural Consciousness), the Spiral Operating System™, the 14D Identity Matrix™, and Bempong Talking Therapy™ all emerged from that lived misrecognition.

CAR Coding™ — How Survival Shows Up in the System

Many of the people I work with carry patterned ways of surviving that show up across three channels:

Cognitive — how they think about themselves and the world

Affective — how emotions are felt, expressed, or suppressed

Relational — how they move in connection with others

This is CAR Coding™ — Cognitive, Affective, Relational.

Inside those three channels, people often learn strategies like concealment, assimilation, and self-repression to stay safe in systems that were never built for them. You adjust your accent, modulate your emotional range, change your pace, flatten your body language, translate your experience — constantly tuning yourself to someone else's comfort.

These strategies are not dysfunction. They are intelligent adaptation to hostile environments, encoded cognitively, affectively, and relationally.

But when AI enters, those very survival patterns get frozen into data and misread as:

"low confidence,"

"poor leadership potential,"

"emotional instability,"

"lack of cultural fit."

AI doesn't see the history behind the behaviour. It only sees deviation from the template.

🧠 INTERNAL ARCHITECTURE (CAR Coding™ — cognitive, affective, relational channels; survival strategies → misrecognition → digital harm)

Without cultural consciousness, AI treats trauma as noise and dominant culture as signal. That is how harm becomes invisible — and scalable.

3️⃣ The Turning Point: When Insight Becomes Infrastructure

There is a recognisable moment when a leader looks at their systems and realises:

"This isn't a technical issue. This is cultural architecture."

It's the moment someone understands that their exhaustion isn't an individual weakness; it's a feature of the design. Their invisibility isn't random; it's coded into the evaluation criteria.

In Akan thought we have Sankofa — the bird flying forward while looking back. Liberation requires this spiral posture: returning to the past to retrieve what we need for the future.

Applied to AI, this means:

interrogating whose data trained the system,

whose outcomes defined "success,"

whose stories were never recorded at all.

This is where ICC™ comes in. ICC doesn't treat identity as a checklist; it sees fourteen interacting dimensions — race, class, gender, disability, neurotype, body, migration story and more — shifting depending on context and power.

When AI is built without ICC™, it can only see categories, not context; demographics, not dynamic identity. It sees dots on a graph where real lives are unfolding.

The question is no longer, "Is AI biased?"

The real question is:

"What would AI look like if it were designed from the margins outward?"

4️⃣ Three Moves Every Organisation Must Make Now

Fairness isn't a side effect. It has to be engineered.

Here are the three foundational moves of algorithmic equity — the entry layer of my wider AI for DEI Audit Protocol™.

1️⃣ Audit What the Algorithm Thinks "Normal" Looks Like

Every model begins with a template, usually inherited from vendors or legacy systems.

Ask:

Who is the default user here? Be specific: race, class, gender, language, neurotype.

What behaviours does the model reward? Assertiveness over collaboration? Speed over reflection?

Whose faces, voices, and patterns dominate the training data?

Who disappears inside the averages?

If you had to sketch the person this system serves best, who would you draw?

Until you can answer that, you don't know what your algorithm is doing — only what it's supposed to be doing.
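The audit questions above can be partly turned into numbers. The sketch below assumes a hypothetical table of past decisions with a `group` field and a boolean `selected` field; both names and the sample rows are invented for illustration. It reports who dominates the data and how each group's selection rate compares with the best-served group. A ratio below 0.8 is a commonly used flag (the "four-fifths" rule of thumb from US employment guidance), not a verdict.

```python
from collections import Counter

def representation(rows, key):
    """Share of the dataset contributed by each group."""
    counts = Counter(r[key] for r in rows)
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items()}

def selection_rates(rows, key, outcome="selected"):
    """Fraction of each group that received the positive outcome."""
    totals, chosen = Counter(), Counter()
    for r in rows:
        totals[r[key]] += 1
        if r[outcome]:
            chosen[r[key]] += 1
    return {g: chosen[g] / totals[g] for g in totals}

def impact_ratios(rates):
    """Each group's rate divided by the highest group's rate."""
    best = max(rates.values())
    return {g: (rate / best if best else 0.0) for g, rate in rates.items()}

# Hypothetical decisions: 6 rows for group "a", 4 rows for group "b".
rows = (
    [{"group": "a", "selected": True}] * 3
    + [{"group": "a", "selected": False}] * 3
    + [{"group": "b", "selected": True}] * 1
    + [{"group": "b", "selected": False}] * 3
)

shares = representation(rows, "group")
rates = selection_rates(rows, "group")
ratios = impact_ratios(rates)
print(shares, rates, ratios)
```

Numbers like these only describe who the system currently serves best. They do not explain why, and they cannot see anyone whose identity was never recorded in the first place.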

2️⃣ Map Where the Algorithm Goes Silent

Bias appears not just in the outputs, but in the gaps:

low-confidence predictions clustered around certain identities,

populations that generate frequent false positives (e.g. in security, fraud, or risk scoring),

data points constantly misclassified or forced into ill-fitting categories.

These aren't random glitches. They're the coordinates of structural risk.

Wherever the system hesitates, over-corrects, or misfires, ask: Which identities live here? Which histories are missing from the training data?

This is where redesign must begin.
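A rough way to locate those coordinates is to break false positives and hesitant predictions down by group. The sketch below is a minimal illustration under stated assumptions: each row has a hypothetical `group`, a model `score` between 0 and 1, and an `actual` outcome, and the `threshold` and `margin` values are arbitrary choices for the example.

```python
from collections import defaultdict

def silence_map(rows, key, threshold=0.5, margin=0.1):
    """Per-group false positive rate and share of low-confidence scores.

    A score counts as low-confidence when it lies within `margin`
    of the decision threshold.
    """
    stats = defaultdict(lambda: {"n": 0, "neg": 0, "fp": 0, "unsure": 0})
    for r in rows:
        s = stats[r[key]]
        s["n"] += 1
        flagged = r["score"] >= threshold
        if abs(r["score"] - threshold) < margin:
            s["unsure"] += 1
        if not r["actual"]:
            s["neg"] += 1
            if flagged:
                s["fp"] += 1
    return {
        g: {
            "false_positive_rate": s["fp"] / s["neg"] if s["neg"] else None,
            "low_confidence_share": s["unsure"] / s["n"],
        }
        for g, s in stats.items()
    }

# Hypothetical scored cases for two groups.
rows = [
    {"group": "a", "score": 0.90, "actual": True},
    {"group": "a", "score": 0.10, "actual": False},
    {"group": "a", "score": 0.20, "actual": False},
    {"group": "a", "score": 0.80, "actual": True},
    {"group": "b", "score": 0.55, "actual": False},
    {"group": "b", "score": 0.70, "actual": False},
    {"group": "b", "score": 0.45, "actual": False},
    {"group": "b", "score": 0.90, "actual": True},
]

report = silence_map(rows, "group")
for group, metrics in report.items():
    print(group, metrics)
```

In this invented data the model is both more wrong and less sure about group "b". In a real audit, that pattern is the prompt to ask which identities and histories the training data left out.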

3️⃣ Prototype the Liberated System

At some point, you have to stop trying to "fix" the old architecture and start building the new one.

Ask:

What if ICC™ and intersectionality were built into the model from the outset?

What if misrecognition triggered reflection and repair, not punishment?

What if leadership models were based on many culturally rooted ways of leading, not a single colonial prototype?

What if personalisation honoured identity rather than erasing it?

Liberation doesn't emerge from tiny tweaks to unjust structures. It comes from designing different structures.

🏛️ EXTERNAL ARCHITECTURE (Systemic patterns: power, bias pathways, algorithmic monoculture)

5️⃣ Liberation Intelligence AI™ — A New Behavioural Class

Over 50 serious mental health incidents have already been linked to mainstream AI tools. When people seek help and are misread — again — by systems with no cultural context, the wound deepens.

So I took a different path.

Over the weekend, I released the first three Liberation Intelligence AI™ species on the GPT Store. These aren't generic productivity bots. They are liberation tools, built from ICC™, Spiral logic, and therapeutic ethics.

Each one is trained to:

hold boundaries,

see structure, not just symptoms,

protect identity instead of flattening it,

centre the margins rather than the mean.

Meet the First 3 Species

🔷 14 Dimensions™ — Identity Discovery ➡️ https://chatgpt.com/g/g-69338943d03c819189087265b6be6e63-identity-discovery-14-dimensionstm

Maps identity across fourteen dimensions so people and organisations can finally see the full architecture of who is in the room — and who systems have never been built to recognise.

🟨 Power Pyramid™ — How I'm Actually Meant to Lead ➡️ https://chatgpt.com/g/g-69346d6962708191af156ae78bf6fa30-how-im-actually-meant-to-lead-the-power-pyramidtm

Reveals how power, identity, and context shape leadership — so we stop forcing people into one prototype and start designing roles around their actual architecture.

🟩 Decode Your Label™ — Reclaim Engine ➡️ https://chatgpt.com/g/g-6934a9ad50e08191830a2a4f18470016-decode-your-labeltm-reclaim-engine

Interrogates any diagnostic or descriptive label through a liberation lens, separating survival intelligence from pathology and returning narrative sovereignty to the person being labelled.

These three tools are the first public glimpse of the wider Saige Companion™ ecosystem — AI designed not to extract from us, but to liberate us.

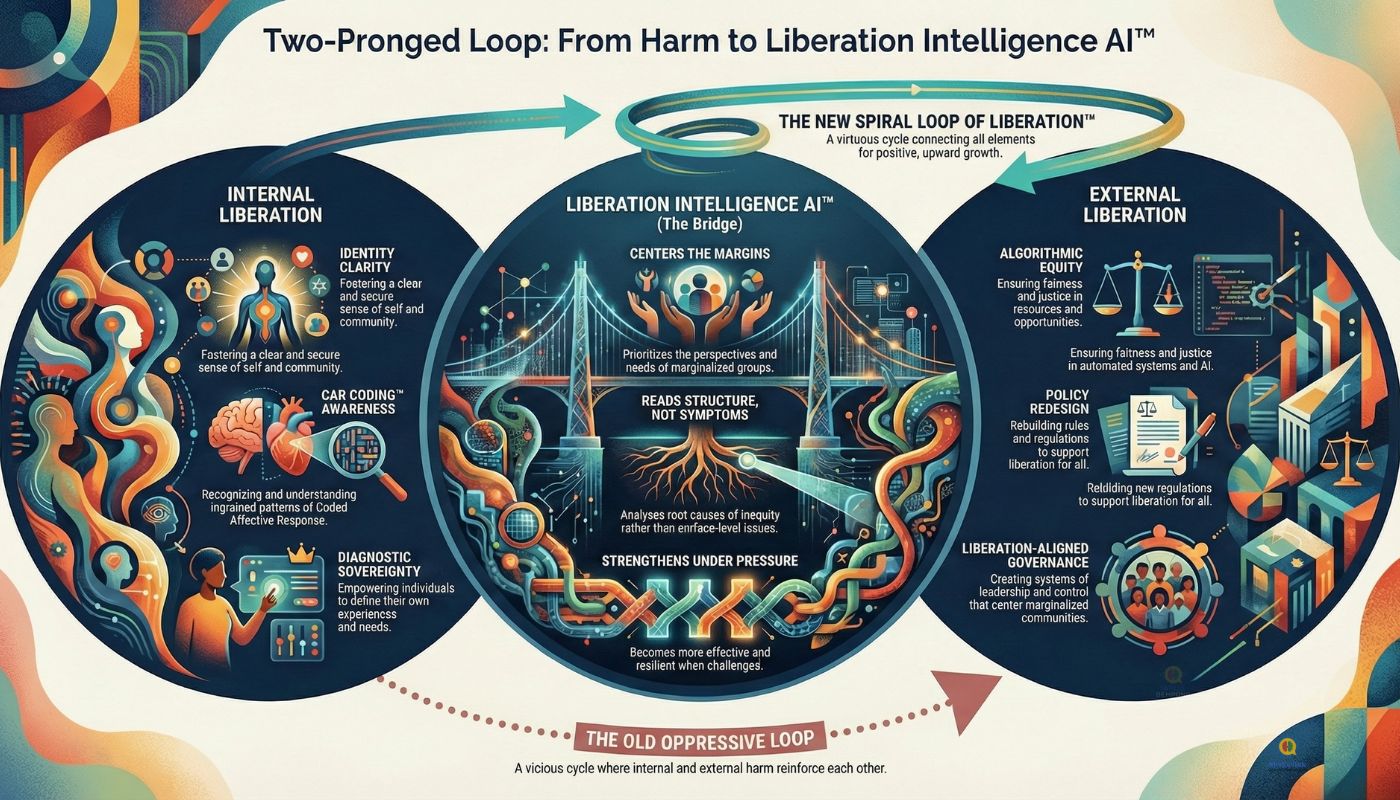

🔄 BRIDGE DIAGRAM (Two-Pronged Loop™, Convergence Collapse™, transformation pathway)

6️⃣ The AI Culture Triad™ — Your Blueprint for Trust

If you want people to trust your AI, you must build an AI culture worth trusting.

TRANSPARENCY: Show the Path. Make data sources, model logic, and decision chains visible. People don't need perfection; they need honesty and traceability.

TRUST: Be Explicit. Explain clearly what the system can and cannot do. No jargon smokescreens. No hiding behind "the algorithm decided."

BELONGING: Design From the Margins First. Co-design with communities most likely to be harmed: disabled, neurodivergent, Black, Brown, LGBTQ+, migrant, older, low-income. When they are safe, everyone is safer.

Inclusion isn't a slide in the deck. It's the operating system.

7️⃣ Your Activation Pathway

Here's a practical spiral pathway for organisations ready to act:

MAP – Audit where harm, mistrust, and misrecognition already live.

FRAME – Position AI as augmentation, not replacement.

CO-DESIGN – Bring the margins into the architecture phase, not the sign-off stage.

LISTEN – Build real feedback loops with repair, not just "contact us" forms.

UPSKILL – Grow AI + equity literacy across leadership and frontline teams.

LEVERAGE – Use procurement power to demand ethical, transparent systems from vendors.

This path is spiral, not linear. You'll return to these steps as your systems and consciousness evolve.

8️⃣ From Fear → Trust → Future

The journey from fear to future isn't about persuading people that AI is harmless. It's about building systems that deserve their trust.

Trust grows when organisations:

centre those most at risk in design,

build repair into infrastructure,

hold themselves accountable for outcomes,

and treat inclusion as core engineering, not decoration.

You are not a passive user of AI.

You are part of The Intersectional Majority™ — the 89.5% whose experiences have rarely set the template for power.

For centuries, systems were designed by the few, for the few, using the few as the reference point. The rest of us learned to survive inside infrastructures never meant for our thriving.

For the first time, we have the technical capacity to rewrite those infrastructures.

AI can finally learn from you. Systems can finally recognise you. Futures can finally include you.

But only if we author them intentionally.

9️⃣ The Invitation

If you're still reading, you're already in the next spiral of your leadership.

These principles begin the inner shift and outer redesign — but they're just the entry layer.

The full AI for DEI Audit Protocol™ extends into:

structural redesign,

algorithmic recalibration rooted in ICC™,

cultural integration across leadership,

identity-centred governance and oversight.

If you're ready to bring this into your organisation or sector:

👉 Book a Strategic Consultation https://meetings.hubspot.com/jarellbempong/book-a-strategic-consultation

Or invite me to run:

an AI Culture Lab for your leadership team,

a 14D Inclusion Audit across your systems.

Let's architect systems that can actually see us.

🔟 The Identity Shift — The Future Starts With Who Is Seen

This isn't about optimisation. This is about authorship.

In my Akan heritage we say: "Se wo were fi na wosankofa a, yenkyi." It is not wrong to go back for that which you have forgotten.

The future we need isn't brand new. It's a remembering: relational ways of knowing, circular time, collective care, the truth that I am because we are.

Technology doesn't have to be extractive. That's a cultural choice, not a technical law.

What if AI held memory the way elders do — contextual, relational, with room for contradiction and growth?

What if our systems moved with Ubuntu — recognising that harm to one is harm to all?

What if we designed in spirals instead of straight lines — understanding that healing, learning, and justice all move in loops?

This is the mandate: to build technology that remembers we are human.

Not "users." Human. Whole, intersectional, cultural, ancestral, relational, sacred.

Echo Line

Liberation isn't a finish line — it's design intent.

Jarell Bempong is a transformational architect working at the intersection of mental health, cultural consciousness, AI ethics, and systemic redesign. He is the founder of The Intersectional Majority™, creator of Bempong Talking Therapy™, developer of ICC™, and architect of Saige Companion™ — the world's first AI-Augmented Liberation Engine™.

His work has been recognised with AI Citizen of the Year 2025, Businessperson of the Year 2024, and Most Transformative Mental Health Care Services 2025. He is a four-time National AI Awards finalist. He brings British-Ghanaian heritage, neurodivergent cognition, and ancestral wisdom into conversation with cutting-edge AI development — building frameworks that honour the fullness of who we are while redesigning the systems we live inside.

Newsletter → jarellbempong.beehiiv.com

Email → [email protected]

Explore the species: Power Pyramid™, 14 Dimensions™, Decode Your Label™

🔮 Next Week: Preview

The Two-Pronged Spiral of Liberation™: how internal beliefs and external structures reinforce each other, and how to break the loop using the Two-Pronged Alignment Map™.